The team of researcher Cheng Jian recently proposed a hash-based method for training binary networks. The work reveals a close relationship between inner-product-preserving hashing and binary weight networks, and shows that network parameter binarization can be transformed into a hashing problem. On ResNet-18, the method is 3 percentage points better than the previous best method.

In recent years, deep convolutional neural networks have spread to a wide range of computer vision tasks and have achieved major breakthroughs in image recognition, object tracking, and semantic segmentation. In some scenarios, the performance of current deep convolutional networks is already sufficient for deployment in real-world applications, which in turn encourages people to apply deep learning more widely.

However, deep convolutional networks face two problems in actual deployment: parameter count and time complexity. On the one hand, the large number of parameters in a deep network occupies a large amount of disk storage and running memory, and these hardware resources are often very limited on mobile and embedded devices. On the other hand, the computational complexity of deep networks is high, which makes network inference slow and increases the power consumption of mobile devices.

To solve these problems, many network acceleration and compression methods have been proposed. Network parameter binarization is one of them: it represents the network parameters as binary values. Since the parameters in a binary network are only +1 and -1, multiplications can be replaced by additions and subtractions. Because multiplication requires more hardware resources and more computational cycles than addition, replacing multiplication with addition accelerates the network.
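The replacement of multiplication by addition can be illustrated with a minimal sketch. The function name `binary_dot` and the sample values are hypothetical, chosen only for illustration, and this is not how an optimized binary network kernel would be written.

```python
def binary_dot(x, signs):
    """Dot product of x with a +1/-1 weight vector, using only add and subtract."""
    acc = 0.0
    for value, sign in zip(x, signs):
        if sign > 0:
            acc += value  # weight +1: add the input
        else:
            acc -= value  # weight -1: subtract the input
    return acc


# Hypothetical inputs and binary weights
x = [0.5, -1.0, 2.0, 3.0]
b = [1, -1, -1, 1]

# Reference result computed with ordinary multiplications
reference = sum(v * s for v, s in zip(x, b))
result = binary_dot(x, b)
print(result, reference)
```

Both values agree, and the loop body contains no multiplication.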

In addition, the original network parameters are stored as 32-bit floating point numbers, while a binary network uses only 1 bit to represent +1 or -1, which gives a 32-fold compression. However, quantizing parameters from 32 bits to 1 bit causes a large quantization loss, and current binary network training methods tend to cause a large drop in network accuracy. How to learn binary network parameters without a large drop in accuracy remains an open problem.
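The 32-fold figure can be checked with a small sketch that packs +1/-1 weights into single bits. The helper names `pack_signs` and `unpack_signs` and the bit order (1 for +1, 0 for -1, least significant bit first) are assumptions made for this example, not a format described in the article.

```python
def pack_signs(signs):
    """Pack +1/-1 weights into bytes, one bit per weight (1 means +1, 0 means -1)."""
    packed = bytearray((len(signs) + 7) // 8)
    for i, s in enumerate(signs):
        if s > 0:
            packed[i // 8] |= 1 << (i % 8)
    return bytes(packed)


def unpack_signs(packed, count):
    """Recover the first `count` +1/-1 weights from the packed bytes."""
    return [1 if (packed[i // 8] >> (i % 8)) & 1 else -1 for i in range(count)]


weights = [1, -1, -1, 1, 1, 1, -1, 1] * 4   # 32 hypothetical binary weights
packed = pack_signs(weights)

float32_bytes = 4 * len(weights)            # storage as 32-bit floats
ratio = float32_bytes / len(packed)         # compression ratio
print(len(packed), ratio)
```

For 32 weights the packed form takes 4 bytes instead of 128, and unpacking restores the original signs exactly.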


Hu Qinghao and colleagues, from the team of Cheng Jian, a researcher at the Institute of Automation, recently proposed a hash-based binary network training method. It reveals a close relationship between Inner Product Preserving Hashing and binary weight networks: network parameter binarization can essentially be transformed into a hashing problem.

Given trained full-precision (32-bit floating point) network parameters $W$, the goal of a binary weight network (BWN) is to learn binary network parameters $B$ while maintaining the original network accuracy. The simplest way to learn the binary parameters $B$ is to minimize the quantization error between $W$ and $B$. However, there is a gap between this quantization error and the network accuracy. Minimizing the quantization error does not directly improve the network accuracy, because the quantization error of each layer accumulates layer by layer and is amplified by the input data.

A better way to learn the binary parameters $B$ is to minimize the difference in inner product similarity. Suppose the network input is $X$. Then $S = X^T W$ is the original inner product similarity and $X^T B$ is the inner product similarity after quantization. Minimizing the error between $S$ and $X^T B$ yields better binary parameters $B$. From the perspective of hashing, $S$ represents the similarity, or neighbor relationship, of the data in the original space, and $X^T B$ represents the inner product similarity after the data is projected into Hamming space. The role of hashing is to project data into Hamming space while preserving, in Hamming space, the neighbor relationships the data had in the original space. Learning the binary parameters $B$ is thus transformed into a hashing problem under inner product similarity: project the data into Hamming space and preserve its inner product similarity from the original space.
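The difference between the two objectives can be made concrete with a small sketch. It writes `W` for the full-precision weights, `B` for the binary weights and `X` for the input; these names, the toy values, and the choice `B = sign(W)` are assumptions for illustration. The code only evaluates the two errors and is not the authors' training procedure.

```python
def squared_error(P, Q):
    """Sum of squared element-wise differences between two matrices (lists of rows)."""
    return sum((p - q) ** 2 for rp, rq in zip(P, Q) for p, q in zip(rp, rq))


def matmul_t(X, M):
    """Compute X^T M for X (d x n) and M (d x k), both given as lists of rows."""
    d, n, k = len(X), len(X[0]), len(M[0])
    return [[sum(X[r][i] * M[r][j] for r in range(d)) for j in range(k)]
            for i in range(n)]


def inner_product_error(X, W, B):
    """Squared error between the similarities X^T W and X^T B."""
    return squared_error(matmul_t(X, W), matmul_t(X, B))


# Toy example: d = 2 input dimensions, n = 2 samples, k = 1 filter
W = [[0.5], [-2.0]]
B = [[1.0 if w >= 0 else -1.0 for w in row] for row in W]  # B = sign(W)
X = [[1.0, 2.0], [0.0, 1.0]]
X_scaled = [[2.0 * v for v in row] for row in X]

quant_err = squared_error(W, B)              # depends on W and B only
ip_err = inner_product_error(X, W, B)        # depends on the input as well
ip_err_scaled = inner_product_error(X_scaled, W, B)
print(quant_err, ip_err, ip_err_scaled)
```

In this toy case the quantization error stays at 1.25 whatever the input is, while the inner product error is 4.25 and grows to 17.0 when the input is doubled. This is one way to see why the weight-only error is a loose proxy for what the next layer actually receives.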

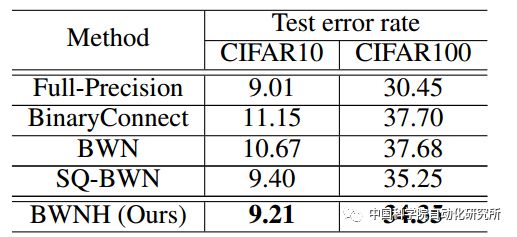

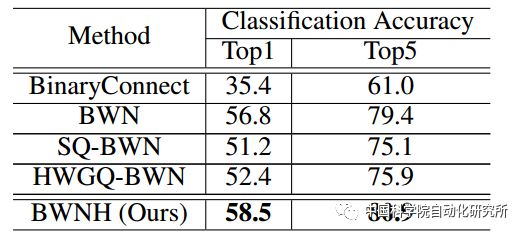

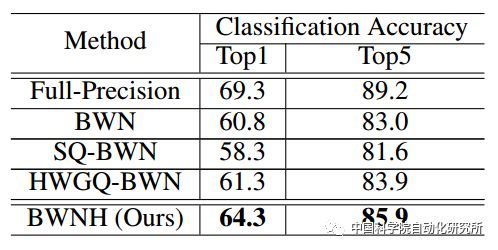

The team first verified the method on the small VGG9 network, and then outperformed existing binary weight networks on AlexNet and ResNet-18. In particular, on ResNet-18 the method is 3 percentage points better than the previous best method.

Table 1: Classification error rate of different methods on VGG9

Table 2: Classification accuracy of different methods on AlexNet

Table 3: Classification accuracy of different methods on ResNet-18

Dasgupta et al. [2] wrote in the November 2017 issue of Science that the fruit fly olfactory neural circuit is in fact a special kind of hash whose hash projection is a sparse binary connection. Comparing it with the binary weight network (BWN) reveals a close relationship between the two. First, both use binary connections, which means that binary weights have a basis in biological neural circuits and offers a hint about the underlying mechanism of binary weight networks. Second, both aim to preserve neighbor relationships and both can be described as hashing problems, which suggests that some connections in a neural network serve to preserve neighbor relationships. Finally, the sparse connections in the fruit fly olfactory circuit are similar in spirit to the parameter sharing mechanism of convolutional layers: in both, each unit connects to only part of the input.
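A sparse binary projection of the kind mentioned here can be sketched as follows. The function names, the dimensions, the fan-in, and the use of a seeded random generator are all assumptions for illustration. The sketch shows only the sparse 0/1 projection step and does not reproduce the full procedure of [2].

```python
import random


def sparse_binary_projection(dim_in, dim_out, fan_in, seed=0):
    """Build a 0/1 matrix in which each output unit connects to `fan_in` random inputs."""
    rng = random.Random(seed)
    rows = []
    for _ in range(dim_out):
        chosen = set(rng.sample(range(dim_in), fan_in))
        rows.append([1 if j in chosen else 0 for j in range(dim_in)])
    return rows


def project(matrix, x):
    """Apply the projection: weights are 0 or 1, so each output is a plain sum."""
    return [sum(v for w, v in zip(row, x) if w) for row in matrix]


# Hypothetical sizes: 10 inputs, 20 output units, 3 connections per unit
matrix = sparse_binary_projection(dim_in=10, dim_out=20, fan_in=3)
x = [float(i) for i in range(10)]
y = project(matrix, x)
print(len(y), sum(matrix[0]))
```

As with binary weights, the projection needs no multiplications, and each output unit sees only a small part of the input.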

The work has been accepted by AAAI 2018 [1] and will be given as an oral presentation at the conference.

References:

[1] Hu Q, Wang P, Cheng J. From Hashing to CNNs: Training Binary Weight Networks via Hashing. AAAI, 2018.

[2] Dasgupta S, Stevens CF, Navlakha S. A neural algorithm for a fundamental computing problem. Science, 2017, 358(6364): 793-796.
