This year, more and more people are driving Teslas, and Autopilot has been involved in more and more accidents. I couldn't stand it any longer and wrote this article over the weekend. Just yesterday, the media reported that a Tesla employee fell asleep during a test drive (whether they were really asleep has yet to be confirmed). Last month, a Tesla crashed into a police car. A few days later, another one drove into a river. The NTSB also released its preliminary report on the fatal crash in March this year.

The accidents keep coming one after another. Some mainstream media outlets are even saying that "self-driving cars will kill people and we need to accept that...". Those of us working on autonomous driving feel truly helpless. We do this work to save more lives, and we will never sacrifice anyone's life in the short term for long-term safety goals. The public must not confuse assisted driving with autonomous driving. If a driver-assistance system is not safe enough, it should not be sold to consumers.

A Tesla employee apparently asleep during a test drive

Driver assistance

Assisted driving is only an aid; it cannot replace a human driver. It covers only functions such as blind-spot monitoring, collision avoidance, emergency braking, parking assistance, adaptive cruise control, lane assistance, and traffic-jam assistance. Under Florida state law, no matter how these functions are combined, as long as the system still depends on the driver's own monitoring, the car cannot be called an autonomous vehicle. Unfortunately, most consumers mistakenly believe that buying a car with driver assistance means buying a self-driving car.

In many U.S. states, the law clearly treats self-driving cars and cars with driver-assistance functions as completely different categories. If a self-driving car malfunctions and causes a crash, the manufacturer is, of course, responsible. However, if the driver-assistance function fails, the responsibility lies entirely with the owner, not with the car company.

Most driver-assistance systems cannot detect stationary objects at high speed

You read that right. Most driver-assistance functions, including Tesla's Autopilot, cannot reliably detect stationary objects once the car reaches 80 kilometers per hour. This is why so many Teslas have hit police cars and fire trucks on the highway. Too many people assume that because Tesla is a reliable brand, it should naturally detect a very obvious object such as a police car even at high speed. However, driver assistance is designed on the premise that there are no stationary obstacles at high speed, and that all vehicles either stay in their own lanes or are moving.

Let's look at the Tesla Model 3 safety manual (the Model S manual says exactly the same thing). Paraphrased: Traffic-Aware Cruise Control cannot detect all objects. It may not brake or slow down for stationary vehicles or objects, especially when you are driving over 50 mph (80 km/h) and the vehicle you are following moves out of your lane, leaving a stationary object in front of you instead. The driver must always pay attention to the road ahead and be ready to take corrective action immediately. Relying entirely on Traffic-Aware Cruise Control can result in serious injury or death. In addition, the system may slow down unexpectedly in response to a vehicle that does not exist or a vehicle outside your lane.

On my daily commute on Highway 101, speeds easily reach 75 mph. There are often crashed cars stopped in the middle of the road or parked police cars, and the car in front frequently swerves out of the lane at the last moment. Only then does the car behind discover that the vehicle ahead is completely stationary and have to brake hard. In other words, there are far too many situations in which Autopilot can fail.

Next is the Mercedes-Benz S-Class safety manual. The manual runs to 548 pages, and it took me several minutes to find the page below. Paraphrased: The braking system can detect stationary obstacles, such as stopped or parked vehicles, only at speeds up to 40 mph (70 km/h). Warning! If you do not receive a visual or audible warning, the braking system may have failed to detect the risk of a collision, may have been switched off, or may be malfunctioning.

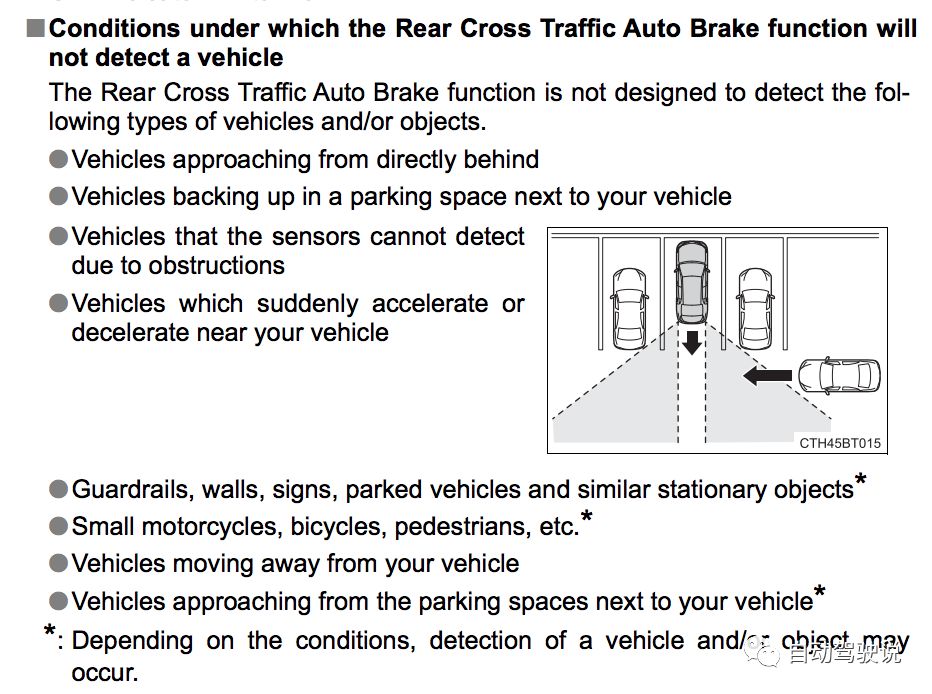

Now look at Toyota's safety manual. It has 612 pages, many of which mention that stationary objects cannot be detected, along with many other limitations. I won't go through them one by one. (BSM refers to the Blind Spot Monitor, i.e. the blind-spot monitoring function.)

Here are my suggestions for everyone.

If you have bought a car with driver-assistance functions: be sure to read the safety manual the manufacturer gave you! Don't just believe what the salesperson tells you. Of course they won't tell you the car's shortcomings; otherwise, who would buy it? Take the time to work out under what circumstances the driver assistance will fail. Even Cadillac, whose driver assistance is among the best, specifies that some highways are not suitable for it. You can only learn these details by reading for yourself; don't assume that because you spent the money, you no longer need to worry.

Dear automaker managers: remember that when designing a product, you can't count on customers reading the safety manual, and you can't push the responsibility onto the customer. Who reads a safety manual several hundred pages long? If a product is not safe enough, it should not be on the market at all.

Colleagues in autonomous driving: think carefully about your application scenarios. If you cannot guarantee safety at highway speeds, don't be greedy for high speed. If you cannot guarantee safe driving at night or in the rain, don't schedule tests at night or in the rain. Doing so is irresponsible toward the test drivers, and also toward the other vehicles and pedestrians on the road during testing.

Engineers: speak up when you see something wrong. If you think some part of the system has a safety problem, don't be afraid to say so, because that problem may well injure a passenger or pedestrian later. Do the right thing. For specific approaches, see this article.

Company CEOs: pay attention to diversity when hiring! Don't just poach people from a few big companies. Engineers from large companies generally have a background in driver assistance, not autonomous driving. Be sure to balance your employees' backgrounds so that the decisions you make won't be one-sided.

I looked at Tesla's Autopilot web page and found that Tesla actually advertises "Full Self-Driving." Is it really acceptable to mislead consumers like this? Moreover, if only the hardware is capable of self-driving, can it really be called "full" self-driving?

The same is true of Tesla's Chinese website.

In contrast, the official Mercedes-Benz website only says "smart driving."
