UC Berkeley recently released BDD100K, the largest and most diverse driving video dataset currently available. The data have four main characteristics: they are large-scale, diverse, captured on real streets, and carry temporal information. Data diversity is particularly important for testing the robustness of perception algorithms. With this dataset you can also take part in the Berkeley autonomous driving challenges at CVPR 2018, and an introductory paper has been posted on arXiv.

BDD100K: A Large-scale Diverse Driving Video Database

UC Berkeley has released BDD100K, currently the largest and most diverse driving video dataset, which comes with a rich set of annotations.

Paper: BDD100K: A Diverse Driving Video Database with Scalable Annotation Tooling

Large-Scale, Diverse, Driving, Video: Pick Four

Autonomous driving is expected to change everyone's life. However, a recent series of self-driving accidents shows that it is still unclear how a human-built perception system can avoid mistakes that seem obvious to humans. As computer vision researchers, the BAIR team is interested in exploring state-of-the-art perception algorithms for self-driving in order to make it safer. To design and test potential algorithms, BAIR wants to use all the information in data collected from a real driving platform. Such data have four main characteristics: they are large-scale, diverse, captured on real streets, and carry temporal information. Data diversity is particularly important for testing the robustness of perception algorithms. However, current open datasets cover only a subset of these properties. Therefore, with the help of Nexar [4], BAIR released the BDD100K database, by far the largest and most diverse open driving video dataset for computer vision research. The project is organized and sponsored by the Berkeley DeepDrive [5] industry consortium, which studies state-of-the-art technologies in computer vision and machine learning for automotive applications.

Figure: Location of a random video subset.

As the name suggests, BAIR's dataset contains 100,000 videos. Each video is about 40 seconds long, 720p, and 30 fps. The videos also come with GPS/IMU information recorded by phones, which shows rough driving trajectories. The videos were collected from across the United States, as shown in the figure above. The database covers different weather conditions, including sunny, cloudy, and rainy days, as well as different times of day, both daytime and nighttime. A comparison with previous datasets, summarized in the table that follows, shows that BAIR's dataset is larger and more diverse.
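To get a feel for the scale these figures imply, the short calculation below multiplies them out. It is only a back-of-the-envelope sketch: it treats "about 40 seconds" as exactly 40 seconds and assumes every video runs at the nominal 30 fps, so the real totals will differ somewhat.

```python
# Nominal figures quoted in the text; "about 40 seconds" is treated as exactly 40.
NUM_VIDEOS = 100_000
SECONDS_PER_VIDEO = 40
FPS = 30

frames_per_video = SECONDS_PER_VIDEO * FPS             # frames in one video
total_frames = NUM_VIDEOS * frames_per_video           # frames in the whole dataset
total_hours = NUM_VIDEOS * SECONDS_PER_VIDEO / 3600    # total footage in hours

print(f"frames per video: {frames_per_video:,}")
print(f"total frames:     {total_frames:,}")
print(f"total duration:   {total_hours:,.0f} hours")
```

Under these assumptions the dataset holds roughly 120 million frames, or a little over 1,100 hours of driving footage.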

Figure: Comparison with some other street scene datasets. It is difficult to compare the number of images between datasets fairly, but we list them here as a rough reference.

These videos and their trajectories can be useful for imitation learning of driving policies, as described in BAIR's CVPR 2017 paper [6]. To facilitate computer vision research on this large-scale dataset, BAIR also provides basic annotations on the video keyframes, as detailed in the next section. The data and annotations can be downloaded at [1].

Annotations

We sample a keyframe at the 10th second of each video and provide annotations for these keyframes. They are labeled at several levels: image tagging, road object bounding boxes, drivable areas, lane markings, and full-frame instance segmentation. These annotations help us understand the diversity of the data and the object statistics in different types of scenes. The labeling process will be discussed in a separate blog post. More information about the annotations can be found in BAIR's arXiv paper [2].
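As a small illustration of what sampling a keyframe at a fixed timestamp means in terms of frame numbers, the sketch below converts a timestamp to a zero-based frame index. The function name `keyframe_index` is hypothetical, and the defaults assume the keyframe sits at the 10-second mark of a video with a constant 30 fps; real recordings may have variable frame timing.

```python
import math


def keyframe_index(timestamp_s: float = 10.0, fps: float = 30.0) -> int:
    """Return the zero-based index of the frame being displayed at timestamp_s.

    Assumes a constant frame rate; this is an illustrative helper, not part
    of any released toolkit.
    """
    if timestamp_s < 0 or fps <= 0:
        raise ValueError("timestamp_s must be >= 0 and fps must be > 0")
    return math.floor(timestamp_s * fps)


index = keyframe_index()          # 10 s at 30 fps
frames_in_video = 40 * 30         # nominal 40-second video at 30 fps
print(index, index < frames_in_video)
```

With the nominal figures, the keyframe is frame 300 of roughly 1,200, a quarter of the way into each clip.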

Figure: Overview of BAIR's annotations.

Road Object Detection

On all 100,000 keyframes, BAIR labels bounding boxes for objects that commonly appear on the road, in order to understand the distribution of objects and their locations. The bar chart below shows the object counts. There are other ways to work with the statistics in the annotations. For example, one can compare object counts under different weather conditions or in different types of scenes. The chart also shows the diverse set of objects that appear in the dataset, as well as its scale: more than one million cars. Readers should keep in mind that these are distinct objects with distinct appearances and contexts.
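The comparison of object counts across weather conditions mentioned above could be sketched as follows. The records here are hand-written toy data, and the field names (`weather`, `objects`, `category`) are assumptions made for illustration rather than the schema of the released annotation files.

```python
from collections import Counter, defaultdict

# Hypothetical toy records; field names are illustrative assumptions only.
frames = [
    {"weather": "sunny", "objects": [{"category": "car"}, {"category": "car"},
                                     {"category": "person"}]},
    {"weather": "rainy", "objects": [{"category": "car"}, {"category": "truck"}]},
    {"weather": "sunny", "objects": [{"category": "person"}]},
]


def count_by_weather(frames):
    """Count labeled objects per category, grouped by the frame's weather tag."""
    counts = defaultdict(Counter)
    for frame in frames:
        for obj in frame["objects"]:
            counts[frame["weather"]][obj["category"]] += 1
    return counts


counts = count_by_weather(frames)
for weather in sorted(counts):
    print(weather, dict(counts[weather]))
```

The same grouping works for any image-level tag, such as scene type or time of day, by swapping the key used for the outer dictionary.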

Figure: Statistics of different types of objects.

The BAIR dataset is also suitable for studying particular domains. For example, if you are interested in detecting and avoiding pedestrians on the street, you have good reason to study this dataset, because it contains more pedestrian instances than previous specialized datasets, as shown in the following table.

Figure: Comparison of training set sizes with other pedestrian data sets.

Lane Markings

Lane markings are important road instructions for human drivers. They are also critical cues for driving direction and localization in autonomous driving systems when GPS or maps do not have accurate global coverage. We divide lane markings into two types according to how they instruct vehicles in the lane. Vertical lane markings (marked in red in the figure below) run along the driving direction of the lane. Parallel lane markings (marked in blue in the figure below) indicate that vehicles in the lane should stop. BAIR also provides attributes for the markings, such as solid versus dashed and double versus single.
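One way to picture this taxonomy is as a small record type holding the direction of a marking and its attributes. The class and field names below are hypothetical and chosen only to mirror the description in the text; they are not the format used in the released annotations.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    VERTICAL = "vertical"   # runs along the driving direction of the lane
    PARALLEL = "parallel"   # tells vehicles in the lane to stop


class Style(Enum):
    SOLID = "solid"
    DASHED = "dashed"


@dataclass(frozen=True)
class LaneMarking:
    """Illustrative record for one lane marking (names are assumptions)."""
    direction: Direction
    style: Style
    double: bool  # True for a double line, False for a single line

    def requires_stop(self) -> bool:
        return self.direction is Direction.PARALLEL


markings = [
    LaneMarking(Direction.VERTICAL, Style.DASHED, double=False),
    LaneMarking(Direction.PARALLEL, Style.SOLID, double=False),
    LaneMarking(Direction.VERTICAL, Style.SOLID, double=True),
]
stop_lines = [m for m in markings if m.requires_stop()]
print(len(stop_lines))
```

Keeping the direction separate from the line attributes lets a consumer filter for stop lines without caring whether a marking is solid, dashed, single, or double.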

If you are ready to try out your lane marking prediction algorithms, look no further. The following is a comparison with existing lane marking datasets.

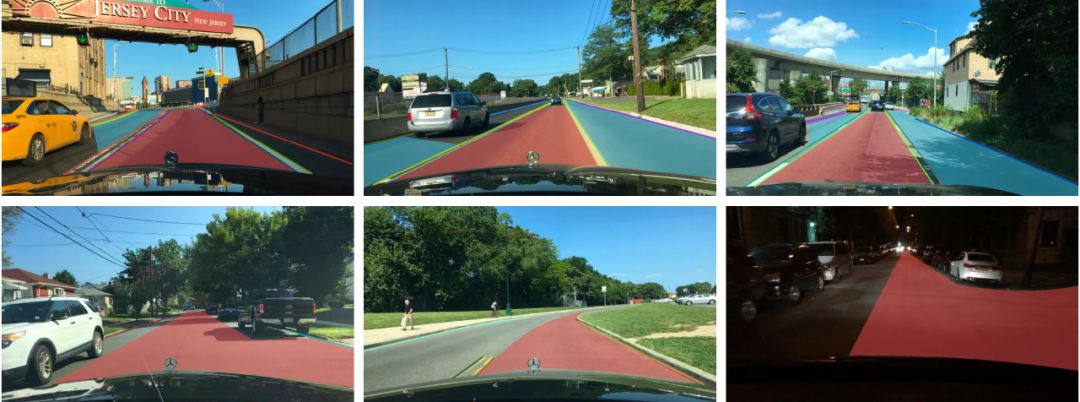

Drivable Areas

Whether we can drive on a road depends not only on lane markings and traffic devices but also on complex interactions with other objects sharing the road. In the end, it is important to understand which areas can be driven on. To study this problem, BAIR also provides segmentation annotations of the drivable area, as shown below. The drivable area is divided into two categories based on the trajectory of the ego vehicle: directly drivable and alternatively drivable. Directly drivable, marked in red, means that the ego vehicle has road priority and can keep driving in that area. Alternatively drivable, marked in blue, means that the ego vehicle can drive in the area but must be cautious, because the road priority may belong to other vehicles.

Full-frame Segmentation

The Cityscapes dataset has shown that full-frame, fine-grained instance segmentation can greatly bolster research in dense prediction and object detection, which are pillars of a wide range of computer vision applications. Since BAIR's videos come from a different domain, instance segmentation annotations are also provided so that the domain shift relative to other datasets can be compared. Obtaining full pixel-level segmentation can be very expensive and laborious. Fortunately, with BAIR's own labeling tool, the labeling cost can be reduced by 50%. In the end, a subset of 10K images is labeled with full-frame instance segmentation. The label set is compatible with the training annotations in Cityscapes, to make it easier to study domain shift between the datasets.

Driving Challenges

BAIR will host three challenges at the CVPR 2018 Workshop on Autonomous Driving [7]: road object detection, drivable area prediction, and domain adaptation of semantic segmentation. The detection task requires your algorithm to find all of the target objects in the test images, while drivable area prediction requires segmenting the areas where a car can drive. In domain adaptation, the test data is collected in China, so systems are challenged to make models trained on data from the United States work on the crowded streets of Beijing, China. You can submit results as soon as you log in to BAIR's online submission portal [8]. Be sure to check out BAIR's toolkit [9] to get started.

Future Work

Perception systems for self-driving are by no means limited to monocular video. They may also include panoramic and stereo video, as well as other types of sensors such as LiDAR and radar. BAIR hopes to provide datasets for these multimodal sensors in the near future.
