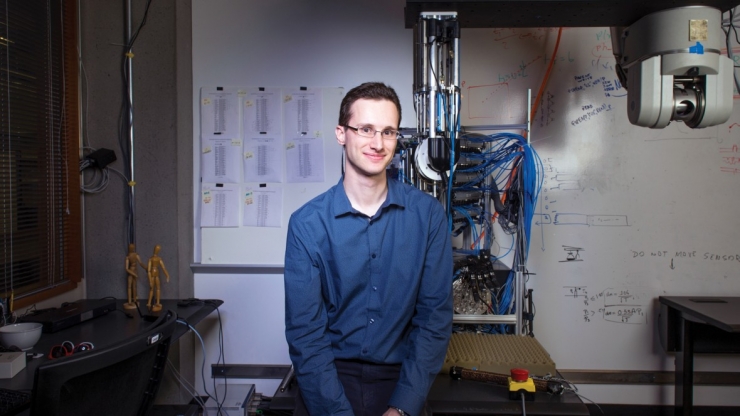

On August 23 (US time), MIT Technology Review released its 16th TR35 list, which recognizes 35 innovators from around the world under the age of 35. The honorees stand out for their creativity, perseverance, and leadership. Their fields include healthcare, energy, computing, and advanced electronics, and they work at start-ups, research institutions, and major corporations. Each is a leader in their field. Sergey Levine, a robotics researcher at the University of California, Berkeley, is one of them.

In March of this year, in the much-watched human-versus-machine Go match, Google's AlphaGo defeated Lee Sedol, one of the world's best Go players, leaving many people in awe of the power of today's artificial intelligence. Sergey Levine was working at Google at the time, during a nine-month stint there, and witnessed the victory firsthand. While admiring AlphaGo's achievement in machine learning, he also noticed a significant limitation of this powerful Go-playing program.

He laughed and said:

Even though they (the programs) can beat the best Go players in the world, they have never picked up a single Go stone themselves.

Robots are often thought of as having powerful brains: they are clever, they can move fast, and they can accomplish tasks that humans cannot. Yet they also have a weakness. Some of the simplest, most everyday movements for humans, such as wiping a table or picking up a cup, are very difficult for them. To master such tasks, the ability to learn is crucial.

What Sergey Levine is doing is teaching robots to learn.

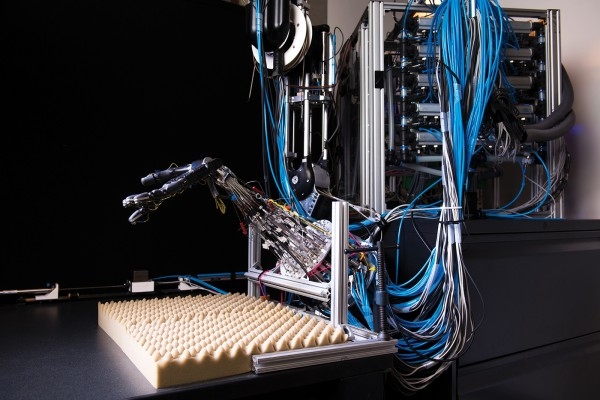

Letting robots learn from experience and teach themselves

At a Google research lab, 14 robotic arms worked side by side for months, picking up all kinds of objects: heavy and light, flat and bulky, small, hard, soft, and translucent. This is exactly what Sergey Levine's team has been working on.

Picking up objects day in and day out may sound pointless, but Levine believes the approach is distinctive and important. The traditional way to teach a robot an action is to have it first recognize and distinguish objects, then train it repeatedly until it reaches the goal. This works well for simple tasks such as screwing on a cap, but the training is long and tedious, and it does little to help with more complex tasks.

Levine's team takes a completely different approach. The key idea is to apply image recognition and classification algorithms, which already perform extremely well, to the robotic arm so that it can learn from its previous successes.

First, Levine gives the arm some easy goals, such as screwing on a cap. Once it succeeds, the arm can review its past successes and learn from them to handle future tasks. Along the way, the robot observes how the data from its vision system maps to the motor commands that carry out the task correctly. The robot also supervises its own learning.

The 14 robots pick up different objects day after day so that they can learn from varied experiences and apply what they learn to other objects. As Levine put it:

This is reverse-engineering the machine's own behavior. This way, it can apply what it has learned to related tasks later on, and the robot keeps getting smarter.

In practice, teaching robots to pick up different objects is very complicated, because there is no obvious, direct link between sensor data and the actions required, especially when a large volume of sensor data arrives at once.

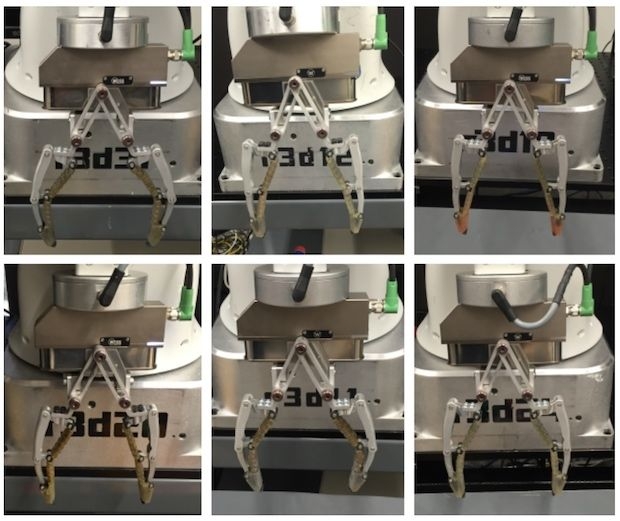

To overcome this, Levine's team had the robotic arms pick up objects (such as cups, tape dispensers, and toy dolphins) using monocular visual servoing, guided by a deep convolutional neural network that predicts whether a grasp will succeed. The network is continually retrained on the robots' own attempts; there are many failures at first, but performance gradually improves. The team did not start with so many arms; to speed up the process, it ran 14 robotic arms in parallel. The whole process is fully autonomous: all humans need to do is fill the bins with objects, switch on the power, and wait for the robots to do the work.
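The article only outlines this idea. As a rough illustration, assuming a trained network that takes the current camera view and a candidate arm motion and returns the probability that the grasp will succeed, the sketch below shows how such a predictor could drive closed-loop servoing: at each step it searches for the motion with the highest predicted success using the cross-entropy method (a choice made for this sketch), moves part of the way, looks again, and closes the gripper once staying put already looks good enough. `predict_success` is a hypothetical toy stand-in for the CNN that reduces the "image" to the object's 2D offset from the gripper, and all parameter values are illustrative.

```python
import random
import statistics


# Hypothetical toy stand-in for a trained CNN g(image, motion) -> P(grasp succeeds).
# The "image" is reduced to the object's (x, y) offset from the gripper; predicted
# success rises as the commanded motion brings the gripper onto the object.
def predict_success(object_offset, motion):
    dx = object_offset[0] - motion[0]
    dy = object_offset[1] - motion[1]
    return 1.0 / (1.0 + dx * dx + dy * dy)


def cem_select_motion(object_offset, iterations=3, samples=64, elites=6, seed=0):
    """Cross-entropy method: sample candidate motions from a Gaussian, keep the
    best-scoring ones, refit the Gaussian to them, and repeat."""
    rng = random.Random(seed)
    mean = [0.0, 0.0]
    std = [1.0, 1.0]
    for _ in range(iterations):
        candidates = [[rng.gauss(mean[i], std[i]) for i in range(2)] for _ in range(samples)]
        candidates.sort(key=lambda m: predict_success(object_offset, m), reverse=True)
        top = candidates[:elites]
        mean = [statistics.mean(m[i] for m in top) for i in range(2)]
        # Small floor keeps the search distribution from collapsing to zero width.
        std = [statistics.pstdev([m[i] for m in top]) + 1e-3 for i in range(2)]
    return mean


def servo_to_object(object_offset, max_steps=10, close_threshold=0.9, step_fraction=0.5):
    """Closed-loop servoing: re-plan from the current observation at every step,
    move part of the way, and stop once closing the gripper here looks good enough.
    Returns (number of moves made, remaining offset)."""
    offset = list(object_offset)
    for step in range(max_steps):
        # Predicted success of not moving at all, i.e. closing the gripper now.
        if predict_success(offset, [0.0, 0.0]) >= close_threshold:
            return step, offset
        motion = cem_select_motion(offset, seed=step)
        # Apply only part of the chosen motion, then "observe" the new offset.
        offset = [offset[i] - step_fraction * motion[i] for i in range(2)]
    return max_steps, offset
```

For example, `servo_to_object([2.0, -1.0])` returns how many corrective moves it made and the remaining offset at the moment it decided to grasp. Re-planning after every partial move is what lets a closed-loop controller recover from an imperfect earlier step instead of committing to one open-loop plan.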

After a year of training, the arms could grasp and pick up small objects. Conventionally programmed robotic arms, however, typically identify an object and respond according to a predetermined program; unlike humans, they cannot adapt to their surroundings. This raises a question: robotic arms handle predictable objects and environments easily, but can they grasp objects they have never seen before?

To find out, Levine had the arms reach into bins filled with random objects and try to grab whatever they could. After a day of this, he collected the data from the robots' grasp attempts and used it to retrain the neural network so that grasping improved. After 800,000 grasp attempts (equivalent to about 3,000 hours of robot training), the arms learned to correct their movements automatically. They soon grasped objects more smoothly and even picked up some tactics, such as pushing one object aside to grab another, or handling soft objects differently from hard ones.
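The collect-and-retrain cycle described above can be sketched as a loop under heavy simplifying assumptions: a hypothetical simulated environment with made-up success rates for two object types and two grasp strategies, attempts that the robot labels itself (did it end up holding something?), and a simple frequency table standing in for the neural network. It is meant only to show why retraining on the robots' own data lowers the failure rate from round to round, not to reproduce the reported figures.

```python
import random

# Hypothetical ground truth, unknown to the learner: success rate of each
# grasp strategy on each object type (values are made up for illustration).
TRUE_SUCCESS = {
    ("soft", "pinch"): 0.3, ("soft", "wrap"): 0.9,
    ("hard", "pinch"): 0.8, ("hard", "wrap"): 0.4,
}
OBJECTS = ["soft", "hard"]
STRATEGIES = ["pinch", "wrap"]


def attempt_grasp(rng, obj, strategy):
    # Self-labelled outcome: True if the simulated grasp holds the object.
    return rng.random() < TRUE_SUCCESS[(obj, strategy)]


def choose(model, rng, obj, explore):
    # Mostly pick the strategy the current model rates highest; sometimes explore.
    if rng.random() < explore:
        return rng.choice(STRATEGIES)
    return max(STRATEGIES, key=lambda s: model.get((obj, s), 0.5))


def retrain(dataset):
    # Stand-in for retraining the network: estimate success frequencies from all data so far.
    totals, wins = {}, {}
    for obj, s, ok in dataset:
        totals[(obj, s)] = totals.get((obj, s), 0) + 1
        wins[(obj, s)] = wins.get((obj, s), 0) + ok
    return {k: wins[k] / totals[k] for k in totals}


def run(rounds=5, arms=14, attempts_per_arm=50, explore=0.1, seed=0):
    """Each round: every arm makes attempts with the current model, all attempts
    are added to the shared dataset, then the model is retrained. Returns the
    failure rate observed in each round."""
    rng = random.Random(seed)
    dataset, model, failure_rates = [], {}, []
    for _ in range(rounds):
        failures = 0
        for _ in range(arms * attempts_per_arm):
            obj = rng.choice(OBJECTS)
            # With no model yet (first round), every attempt is random.
            s = choose(model, rng, obj, explore if model else 1.0)
            ok = attempt_grasp(rng, obj, s)
            failures += not ok
            dataset.append((obj, s, ok))
        failure_rates.append(failures / (arms * attempts_per_arm))
        model = retrain(dataset)
    return failure_rates
```

Calling `run()` returns the failure rate for each round: the first round, which tries strategies at random, fails far more often than later rounds that exploit the retrained estimates while still exploring a little.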

Throughout all of this, no programmer wrote code telling the arms how to grasp objects; they learned from their own experience. Using a feedback loop, they also brought their grasp failure rate down to 18%.

The robots in Levine's lab have now been trained to "grab things," but before they can move from the lab into the real world and cope easily with changing environments, different objects, varied lighting conditions, and differing degrees of wear, these robotic arms will still need a long "tune-up period."

Levine now plans to extend the research to a wider range of areas and then test it in a variety of real environments outside the laboratory. We look forward to seeing these robots keep learning from experience and become more intelligent robots with more of a "soul."

Xinzhizao is the robotics public account run by Lei Feng Network (search for the "Lei Feng Net" public account). We follow the current state and future of robots and of industries connected with robotics. Interested readers can follow the WeChat account AIRobotics.