High-Performance Multi-Core Processors

Summary

As more and more mobile handsets support video, the need for network support for streaming content and real-time communications is also increasing. Upgrading a deployed 3G media gateway can support lower resolutions and frame rates, but because of its limited processing power, such an upgrade cannot meet the needs of video as it becomes a mainstream application.


Enabling scalable video applications that support high definition (HD) requires significant video processing capability. Multi-core digital signal processors (DSPs) not only have the enhanced video processing capability to meet these needs, but also meet the need for scalability and low power consumption.

This article introduces a new multi-core platform that enables high-density video processing through optimized inter-core communication, task management, and memory access. It also describes how the resulting scalable implementation supports high-density, multi-channel, multi-core HD video applications.

Table of Contents

1 Introduction

2 Challenges for Infrastructure HD Video

2.1 External I/O Interface

2.2 Processing Performance

2.3 Memory Design Considerations

2.4 Multi-core Collaboration and Synchronization

2.5 Multi-chip Systems

3 KeyStone DSP - TI's Latest Multi-core Processor

List of Tables

Table 1 KeyStone DSP and Video Requirements

List of Figures

Figure 1 Video communication over the network

Figure 2 KeyStone DSP block diagram

C64x+ and C67x are trademarks of Texas Instruments.

All other trademarks are the property of their respective owners.

1 Introduction


The worldwide deployment of 3G and 4G mobile networks and the emergence of wireless hotspots have created a critical need for data bandwidth among handheld end users. In addition to web and data applications, video has become another driving force behind the growth of mobile data.

As more users switch to video applications, as the recent popularity of Apple's FaceTime and similar video-calling applications shows, network infrastructure must deliver significant performance improvements to support video content.

Handheld terminals now capture and play back video at higher resolutions and frame rates. Traditionally deployed 3G media gateways are designed to support high-density voice channels and low-resolution video, and often do not meet user expectations for high quality.

In addition, because of technical constraints such as battery life and memory size, handheld terminals support only a few standards with a limited set of parameters, so the media gateway must support more codecs and conversion modes, such as transcoding (changing the standard), transsizing (changing the resolution), and transrating (changing the bit rate). For example, when a mobile phone user is driving at high speed, the network can adapt to the bandwidth that the instantaneous link conditions allow and efficiently provide a matching compression, resolution, and bit rate. The video stream is then not interrupted, and the handheld terminal does not waste bandwidth or battery power on scaling or editing.

To fully meet these needs, the video processing capability of high-density media gateways must improve significantly. Multi-core DSPs provide a scalable solution at lower operating cost and address the power and space concerns that operators focus on.

The organization of this paper is as follows:

First, it addresses the challenges and resource requirements of processing high-resolution video, and how to implement video coding algorithms efficiently and scalably so that both low-resolution and high-resolution channels are supported.

Second, it discusses how software and hardware options can improve the efficiency of multi-core operation.

Finally, it reviews the latest advances in multi-core DSP technology and explores the platforms that developers can leverage.

2 Challenges for Infrastructure HD Video

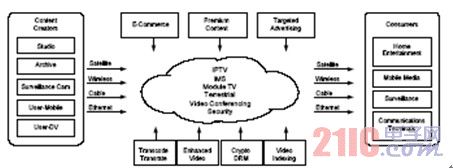

Figure 1 depicts a video communication system in an infrastructure network. A typical system should support multiple functions, including:

• High-density media transcoding and rate adaptation

• Audio transcoding associated with video transcoding

• Large multi-party video conferencing

• Processing of other media, such as voice

Figure 1 Network-based video communication

Transcoding is a typical video application in communication infrastructure: the compressed input stream is decoded into a YUV-domain video stream, which is then re-encoded with a different standard (transcoding), a different bit rate (transrating), a different resolution (transsizing), or any combination of these. Video content comes from a wide range of sources, from high-quality HD professional cameras to low-resolution smartphone recordings, and receivers range from large HDTV screens to low-resolution handheld terminals. The video infrastructure must therefore meet a variety of needs, including:

• Multiple coding standards, such as DV, MPEG-2, MPEG-4, H.264, and the future H.265.

• Multiple resolutions and frame rates: from 128x96 pixels (Sub-QCIF, SQCIF) or even lower, up to HD (1920x1080) or even Ultra HD (4320p, 8K), and from 10 to 60 frames per second.

• A wide range of coded input/output (I/O) bit rates, from 48 Kbps for low-resolution, low-quality handheld video streams to 50 Mbps or more for professional quality (H.264 Level 4.2). YUV-domain video streams require very high bandwidth; for example, a 1080p60 YUV stream with 4:2:0 chroma sampling requires about 1.5 Gbps.

Latency requirements vary by application: video conferencing and real-time gaming require very low latency, no more than 100 milliseconds; video-on-demand can accept moderate latency (up to a few seconds); and non-real-time processing such as storage can tolerate even longer delays.

The challenge for a communications infrastructure network is to deliver all content to every user who requests it while keeping hardware utilization and efficiency high. To illustrate, assuming load scales linearly with resolution and frame rate, the processing load of a single 1080i60 channel equals that of 164 Quarter Common Intermediate Format (QCIF) channels at 15 fps. Hardware that supports one 1080i60 channel should therefore also support 164 QCIF channels with equal efficiency and high utilization. Achieving scalability over two orders of magnitude, however, is a major challenge.
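The 164-channel figure follows from comparing pixel rates. A minimal sketch, assuming (as the text does) that processing load is proportional to pixels per second and counting 1080i60 as 30 full 1920x1080 frames per second; the helper name `pixel_rate` is illustrative:

```python
def pixel_rate(width: int, height: int, frames_per_second: float) -> float:
    """Pixels processed per second, used here as a proxy for processing load."""
    return width * height * frames_per_second


# 1080i60 = 60 fields of 1920x540 per second = 30 full 1920x1080 frames per second.
hd_1080i60 = pixel_rate(1920, 1080, 30)
qcif_15fps = pixel_rate(176, 144, 15)

equivalent_qcif_channels = hd_1080i60 / qcif_15fps
print(f"One 1080i60 channel ~ {equivalent_qcif_channels:.1f} QCIF channels at 15 fps")
```

The ratio comes out at about 163.6, which rounds to the 164 channels quoted above.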

Meeting such high scalability requirements calls for a programmable hardware solution. Some video applications require very high bit-rate inputs and outputs, so a processor-based solution must have peripherals that provide enough external interface bandwidth. The processor must have enough processing power to handle real-time, high-definition, high-quality video, and enough on-chip resources, such as fast memory, internal buses, and DMA support, to use that processing power efficiently.

Single-core DSPs are highly flexible and can efficiently execute a variety of algorithms, handling not only voice, audio, and video but other functions as well. However, a single-core DSP lacks the computing power to process real-time video at arbitrary resolutions; it can handle only low-resolution video such as SQCIF, QCIF, and Common Intermediate Format (CIF). The power consumption of single-core DSPs also makes them impractical for high-density video processing systems.

The new multi-core DSPs have very high processing power and consume less power per operation than single-core DSPs. To determine whether a multi-core processor can be an effective hardware solution for communication infrastructure, its interfaces, processing performance, memory, and multi-core cooperation and synchronization mechanisms must be evaluated against a range of use cases.

2.1 External I/O Interface

In a typical transcoding application, the bitstream is carried in IP packets. The bandwidth required depends on the resolution and on the network bandwidth available to the user. Typical bandwidth requirements for a single consumer-quality H.264 stream, as a function of resolution, are:

• HD resolution, 720p or 1080i - 6 to 10 Mbps

• D1 resolution, 720 x 480, 30 frames per second (fps), or 720 x 576, 25 frames per second – 1 to 3 Mbps

• CIF resolution, 352×288, 30 frames/sec – 300 to 768 Kbps

• QCIF resolution, 176 × 144, 15 frames / sec – 64 to 256 Kbps

The total external interface bandwidth required by a transcoding application is the sum of the bandwidths of the input and output media streams. Supporting multiple HD channels or a large number of lower-resolution channels requires at least one Serial Gigabit Media Independent Interface (SGMII).
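As a rough check of that sum, the sketch below totals input plus output bit rates for a channel mix, using the upper end of each range listed above. The channel mix and the names `MAX_BITRATE_MBPS` and `transcode_io_mbps` are hypothetical, and IP/UDP/RTP packet overhead is ignored:

```python
# Upper end of each consumer-quality H.264 range listed above, in Mbps.
MAX_BITRATE_MBPS = {"HD": 10.0, "D1": 3.0, "CIF": 0.768, "QCIF": 0.256}


def transcode_io_mbps(channels):
    """Sum input + output bit rates over (input_res, output_res, count) tuples."""
    total = 0.0
    for in_res, out_res, count in channels:
        total += count * (MAX_BITRATE_MBPS[in_res] + MAX_BITRATE_MBPS[out_res])
    return total


# Hypothetical mix: 4 HD->D1 transsizing channels and 200 QCIF->QCIF transrating channels.
mix = [("HD", "D1", 4), ("QCIF", "QCIF", 200)]
total = transcode_io_mbps(mix)
SGMII_MBPS = 1000.0  # one 1 Gbps SGMII port
print(f"{total:.1f} Mbps of {SGMII_MBPS:.0f} Mbps SGMII ({total / SGMII_MBPS:.0%})")
```

Even this fairly dense mix uses only a fraction of a single SGMII port, before any protocol overhead is added.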

Non-transcoding video applications encode raw video from, or decode it to, the YUV (or an equivalent) domain. Raw video streams have high bit rates and are typically moved into or out of the processor over a fast, high-bit-rate bus such as PCI, PCI Express, or Serial RapidIO (SRIO).

The bandwidth required to transfer a single raw YUV video stream with 8-bit pixel data and 4:2:0 or 4:1:1 chroma sampling is:

• 1080i60 - 746.496 Mbps

• 720p60 - 663.552 Mbps

• D1 (30 fps NTSC or 25 fps PAL) - 124.416 Mbps

• CIF (30 fps) - 36.49536 Mbps

• QCIF (15 fps) - 4.56192 Mbps

Therefore, a processor that decodes four 1080i60 H.264 channels needs a bus of more than 4 Gbps, assuming 60% bus utilization.
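The raw-bandwidth figures above follow from width x height x frame rate x 12 bits per pixel (8-bit luma plus 4:2:0 or 4:1:1 chroma). A minimal sketch, assuming 1080i60 is counted as 30 full frames per second; `raw_yuv_mbps` is an illustrative helper, and 1080p60 is included to check the 1.5 Gbps example from Section 2:

```python
def raw_yuv_mbps(width, height, frames_per_second, bits_per_pixel=12):
    """Raw bandwidth in Mbps for 8-bit 4:2:0 / 4:1:1 video (12 bits per pixel on average)."""
    return width * height * bits_per_pixel * frames_per_second / 1e6


FORMATS = {
    "1080p60": (1920, 1080, 60),
    "1080i60": (1920, 1080, 30),  # 60 fields/s = 30 full frames/s
    "720p60": (1280, 720, 60),
    "D1 NTSC": (720, 480, 30),
    "D1 PAL": (720, 576, 25),
    "CIF": (352, 288, 30),
    "QCIF": (176, 144, 15),
}
rates = {name: raw_yuv_mbps(*dims) for name, dims in FORMATS.items()}
for name, mbps in rates.items():
    print(f"{name:8s} {mbps:10.5f} Mbps")

# Bus needed to carry four decoded 1080i60 outputs at 60% bus utilization.
bus_gbps = 4 * rates["1080i60"] / 0.60 / 1000
print(f"Bus for 4 x 1080i60: {bus_gbps:.2f} Gbps")
```

The four-channel case works out to just under 5 Gbps, which is where the "more than 4 Gbps" requirement comes from.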

2.2 Processing Performance

The processing performance a programmable processor needs for H.264 video depends on several parameters, including resolution, bit rate, image quality, and video content. This section discusses the factors that affect cycle consumption and gives rules of thumb for average cycle consumption in common use cases.

Like other video standards, H.264 defines only the decoder. For a given encoded stream, all conforming decoders produce the same YUV output.

Therefore, image quality is determined by the encoder, not the decoder. However, encoder choices can affect the decoder's cycle consumption.

The cycle consumption of the entropy decoder depends on the entropy coding method and the bit rate. The H.264 Main and High profiles (MP/HP) define two lossless entropy coding methods: context-adaptive binary arithmetic coding (CABAC) and context-adaptive variable-length coding (CAVLC). CABAC achieves a higher compression ratio, so image quality is better for the same number of bits, but it needs about 25% more cycles per bit than CAVLC. The number of cycles needed to decode a CABAC or CAVLC stream is a non-linear, monotonically increasing function of the number of bits.

The processing load of all other decoder functions depends on resolution: higher resolution requires more cycles, roughly in proportion to the total number of macroblocks. Video content and encoder algorithms and tools can also affect the decoder's cycle consumption to some extent; Appendix A - Decoder Performance Dependencies lists the encoder algorithms and tools that do.

Encoders that implement a given bit rate on a programmable device require a trade-off between quality and processing load. Appendix B – Motion Estimation and Bit Rate Control analyzes two encoder algorithms that can affect the quality of the encoder and consume a large number of cycles.

For typical high-quality consumer video streams, such as sports content, the following rules of thumb give the number of cycles per second that an H.264 encoder consumes per channel in common use cases:

• QCIF resolution, 15 fps, 128 Kbps - 27 million cycles per channel

• CIF resolution, 30 fps, 300 Kbps - 200 million cycles per channel

• D1 resolution, NTSC or PAL, 2 Mbps - 660 million cycles per channel

• 720p resolution, 30 fps, 6 Mbps - 1.85 billion cycles per channel

• 1080i60, 60 fields per second, 9 Mbps - 3.45 billion cycles per channel

Similarly, the number of cycles consumed by the H.264 decoder is:

• QCIF resolution, 15 fps, 128 Kbps - 14 million cycles per channel

• CIF resolution, 30 fps, 300 Kbps - 70.5 million cycles per channel

• D1 resolution, NTSC or PAL, 2 Mbps - 292 million cycles per channel

• 720p resolution, 30 fps, 6 Mbps - 780 million cycles per channel

• 1080i60, 60 fields per second, 9 Mbps - 1.66 billion cycles per channel
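To see the roughly linear relationship between load and macroblock count described earlier, the rule-of-thumb figures can be normalized by macroblock rate. A sketch, assuming 16x16 macroblocks with frame dimensions rounded up to a multiple of 16, 1080i60 treated as 30 frames per second, and D1 taken as 720x480 at 30 fps (720x576 at 25 fps gives the same macroblock rate); the names `RULES` and `macroblocks_per_second` are illustrative:

```python
import math


def macroblocks_per_second(width, height, frames_per_second):
    """16x16 macroblocks per second; dimensions are rounded up to a multiple of 16."""
    mbs_per_frame = math.ceil(width / 16) * math.ceil(height / 16)
    return mbs_per_frame * frames_per_second


# (width, height, frames/s, encoder cycles/s, decoder cycles/s) from the rules of thumb above.
RULES = {
    "QCIF": (176, 144, 15, 27e6, 14e6),
    "CIF": (352, 288, 30, 200e6, 70.5e6),
    "D1": (720, 480, 30, 660e6, 292e6),
    "720p30": (1280, 720, 30, 1.85e9, 780e6),
    "1080i60": (1920, 1080, 30, 3.45e9, 1.66e9),
}

per_mb = {}
for name, (w, h, fps, enc, dec) in RULES.items():
    mb_rate = macroblocks_per_second(w, h, fps)
    per_mb[name] = (enc / mb_rate, dec / mb_rate)
    print(f"{name:8s} {mb_rate:7d} MB/s  "
          f"enc {enc / mb_rate:6.0f}  dec {dec / mb_rate:6.0f} cycles/MB")
```

With these inputs the encoder figures fall between roughly 14,000 and 18,200 cycles per macroblock and the decoder figures between roughly 5,900 and 9,400, even though the macroblock rate spans more than two orders of magnitude.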

The cycle count of a transcoding application (a complete decoder plus a complete encoder) is the sum of the encoder and decoder consumption, plus any additional overhead where applicable.
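A rough channel-count estimate follows directly from this sum. A sketch, assuming eight cores at 1 GHz (the figures in Table 1), perfect load balancing across cores, and a hypothetical `overhead` fraction for anything beyond decode plus encode; memory and I/O limits are ignored, and the function names are illustrative:

```python
# Rule-of-thumb cycles per second per channel, from the lists above.
ENCODE = {"QCIF": 27e6, "CIF": 200e6, "D1": 660e6, "720p30": 1.85e9, "1080i60": 3.45e9}
DECODE = {"QCIF": 14e6, "CIF": 70.5e6, "D1": 292e6, "720p30": 780e6, "1080i60": 1.66e9}


def transcode_cycles(src, dst, overhead=0.0):
    """Cycles/s for one channel: full decode of src + full encode of dst, plus an overhead fraction."""
    return (DECODE[src] + ENCODE[dst]) * (1.0 + overhead)


def channels_that_fit(src, dst, cores=8, core_hz=1.0e9, overhead=0.0):
    """Whole channels that fit in the device's total cycle budget (ideal load balancing)."""
    return int(cores * core_hz // transcode_cycles(src, dst, overhead))


print(channels_that_fit("QCIF", "QCIF"))                 # QCIF -> QCIF transrating
print(channels_that_fit("1080i60", "720p30"))            # HD -> HD transsizing
print(channels_that_fit("QCIF", "QCIF", overhead=0.10))  # with 10% extra overhead
```

For QCIF-to-QCIF transrating this gives 195 channels (41 million cycles per channel against an 8 G cycle/s budget), close to the 200-channel QCIF example used in Section 2.3.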

2.3 Memory Considerations

The trade-off between cost and memory requirements is an important consideration in any hardware design. When analyzing the memory requirements of a multi-core video processing solution, you need to answer the following questions:

• How much memory is required, and of what type (private or shared)?

• Is the memory fast enough to support the required traffic?

• Is the access bus fast enough to support the required traffic?

• Can the memory architecture support multi-core access with minimal performance loss?

• Does the memory architecture support moving data into and out of the processor with minimal conflicts?

• What hardware is available to support memory access, such as DMA channels, DMA controllers, prefetch mechanisms, and fast, intelligent cache architectures?

The amount of memory required depends on the application. The following three examples illustrate three different memory requirements:

- Wireless transrating: converting QCIF H.264 BP to QCIF at a different bit rate, with very low latency and a single motion-estimation reference frame. H.264 BP needs enough memory to store 5 frames of 38,016 bytes each, so one channel (including the input and output streams) needs less than 256 KB. Processing 200 channels simultaneously requires 50 MB of data memory.

- Multi-channel decoder: for an H.264 HP 1080p decoder, if there are at most 5 B-frames between consecutive I- or P-frames, memory for only 7 to 8 frames is needed, so a single channel (including the input and output streams) needs less than 25 MB. Processing five channels simultaneously requires 125 MB of data memory.

- High-quality live TV broadcast: H.264 HP 720p60 encoding of live TV programs requires 600 MB per channel when the system must hold a 7-second delay, as required by the FCC. Processing two channels in parallel requires 1.2 GB of data memory.
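The per-channel figures can be checked from the frame size of 8-bit 4:2:0 video (1.5 bytes per pixel). A minimal sketch, assuming 8 frames for the 1080p decoder (the upper end of 7 to 8), 1088 coded lines for 1080p (H.264 codes in 16-line macroblock rows), and 7 s x 60 frames for the delay buffer; input/output stream buffers are not included and the names are illustrative:

```python
def frame_bytes(width, height, bytes_per_pixel=1.5):
    """Bytes for one 8-bit 4:2:0 frame."""
    return width * height * bytes_per_pixel


MIB = 1024 * 1024

# Example 1: QCIF H.264 BP transrating, 5 stored frames per channel.
qcif_channel = 5 * frame_bytes(176, 144)
# Example 2: 1080p H.264 HP decoder, 8 stored frames of 1920x1088.
hd_channel = 8 * frame_bytes(1920, 1088)
# Example 3: 720p60 encoder buffering a 7-second delay.
delay_channel = 7 * 60 * frame_bytes(1280, 720)

print(f"QCIF:   {qcif_channel / 1024:.0f} KiB per channel")
print(f"1080p:  {hd_channel / MIB:.1f} MiB per channel")
print(f"720p60: {delay_channel / MIB:.0f} MiB per channel")
```

All three land under the per-channel bounds given in the examples (256 KB, 25 MB, and about 600 MB).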

To make the most of the low-cost advantage of video processing systems, bulk data must reside in external memory, sized for the worst-case application. At the same time, the data being processed must be in internal memory to support the processor's high throughput. An optimized system uses a ping-pong scheme: while the core processes data in internal memory, DMA moves the next data from external to internal memory and moves results from internal to external memory. A typical processor has a small L1 memory that can be configured as cache or RAM, a larger private L2 memory for each core (also configurable as cache or RAM), and a shared L2 memory that every core can access. Multiple independent DMA channels for reading and writing external memory strengthen the ping-pong mechanism.
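The ping-pong mechanism can be sketched in software as double buffering. In this minimal sketch, a single worker thread stands in for a DMA channel and Python lists stand in for external and internal memory; the function names are hypothetical and no particular DMA API is modeled. While the "core" computes on one internal buffer, the "DMA" fills the other. The outbound transfer of results back to external memory is omitted for brevity.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_block(external, index, block_size, buffer):
    """Stand-in for a DMA read: copy one block of 'external' into an internal buffer."""
    start = index * block_size
    chunk = external[start:start + block_size]
    buffer[:len(chunk)] = chunk
    return len(chunk)  # number of valid elements now in the buffer


def process_block(block):
    """Stand-in for the core's computation on data held in internal memory."""
    return sum(block)


def ping_pong(external, block_size):
    """Process 'external' block by block, fetching block i+1 while block i is processed."""
    n_blocks = (len(external) + block_size - 1) // block_size
    buffers = [[0] * block_size, [0] * block_size]  # "ping" and "pong" internal buffers
    results = []
    with ThreadPoolExecutor(max_workers=1) as dma:  # one worker plays the DMA engine
        pending = dma.submit(fetch_block, external, 0, block_size, buffers[0])
        for i in range(n_blocks):
            valid = pending.result()  # wait until block i has landed in its buffer
            current = buffers[i % 2]
            if i + 1 < n_blocks:
                # Start filling the other buffer before computing on this one.
                pending = dma.submit(fetch_block, external, i + 1, block_size,
                                     buffers[(i + 1) % 2])
            results.append(process_block(current[:valid]))
    return results


print(ping_pong(list(range(10)), 4))
```

Reusing the other buffer is safe because block i-1, which last occupied it, has already been processed before the fetch of block i+1 is issued.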

Appendix C - External Memory Bandwidth estimates the bandwidth needed to move data from external to internal memory with the ping-pong mechanism for the application examples above. The effective bandwidth of the external memory must be greater than 3.5 GB/s.

2.4 Multi-core Collaboration and Synchronization

When multiple cores process the same video channel, they must communicate with each other to synchronize, to split or share input data, to combine output data, or to exchange data during processing. Appendix A - Decoder Performance Dependencies describes several algorithms for dividing video processing among multiple cores.

Parallel processing and pipeline processing are two commonly used partitioning methods. In parallel processing, two or more cores handle the same input channel, so there must be a race-free mechanism for sharing information among cores. Semaphores can protect global regions from race conditions. The hardware should support both blocking and non-blocking semaphores to eliminate race conditions effectively, preventing two cores from accessing the same memory location at the same time.
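The difference between blocking and non-blocking semaphore access can be sketched with threads standing in for cores. This is a minimal model under stated assumptions: `HwSemaphore` is a hypothetical wrapper around `threading.Lock` and does not model the KeyStone semaphore module's actual interface. Each "core" first tries a non-blocking acquire (where a real core could do other work on failure) and then falls back to a blocking wait before updating the shared region.

```python
import threading


class HwSemaphore:
    """Hypothetical model of one hardware semaphore guarding a shared region."""

    def __init__(self):
        self._lock = threading.Lock()

    def acquire_blocking(self):
        self._lock.acquire()  # wait until the semaphore is free

    def try_acquire(self):
        return self._lock.acquire(blocking=False)  # returns immediately: True if granted

    def release(self):
        self._lock.release()


shared = {"slices_done": 0}
sem = HwSemaphore()


def core(core_id, n_updates, busy_counts):
    for _ in range(n_updates):
        if not sem.try_acquire():          # non-blocking attempt
            busy_counts[core_id] += 1      # a real core could do other work here
            sem.acquire_blocking()         # fall back to a blocking wait
        try:
            shared["slices_done"] += 1     # critical section on the shared region
        finally:
            sem.release()


busy = [0, 0]
threads = [threading.Thread(target=core, args=(i, 10_000, busy)) for i in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(shared["slices_done"], busy)
```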

With a pipeline algorithm, one or more cores execute the first part of the processing and then pass intermediate results to a second set of cores that continues the processing. Because video processing load depends on content, this hand-off mechanism may face the following problems:

• If more than one core processes the first pipeline stage, frame N+1 may be finished before frame N. The hand-off mechanism must therefore be able to reorder outputs/inputs.

• Even if the pipeline stages are balanced on average (in terms of processing load), individual frames are not necessarily balanced. The hand-off mechanism must provide buffering between pipeline stages so that a core that finishes early does not stall waiting for other cores.

• If the algorithm requires tight coupling between two pipeline stages (for example, to resolve dependencies), the mechanism must support both tight and loose coupling.
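The reordering and buffering requirements above can be illustrated with a small reorder buffer between two pipeline stages. A sketch under stated assumptions: frames are identified by consecutive integers, and the class name `ReorderBuffer` is hypothetical. Frames that finish early wait in a min-heap and are released only when every earlier frame has been released.

```python
import heapq


class ReorderBuffer:
    """Releases frames strictly in frame-number order, buffering those that finish early."""

    def __init__(self, first_frame=0):
        self._next = first_frame
        self._pending = []  # min-heap of (frame_number, payload)

    def push(self, frame_number, payload):
        """Accept a finished frame; return the frames that are now ready, in order."""
        heapq.heappush(self._pending, (frame_number, payload))
        ready = []
        while self._pending and self._pending[0][0] == self._next:
            ready.append(heapq.heappop(self._pending))
            self._next += 1
        return ready


rob = ReorderBuffer()
completion_order = [1, 0, 3, 2, 4]  # first-stage cores finish frames out of order
released = []
for n in completion_order:
    released.extend(num for num, _ in rob.push(n, f"frame{n}"))
print(released)
```

Frame 1 is held until frame 0 arrives, and frame 3 until frame 2, so the second stage always sees frames 0, 1, 2, 3, 4 in order.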

2.5 Multi-chip Systems

Processing super video (SVGA), 4K, and higher resolutions in real time, or handling H.264 HP at Level 5, may require more than one chip working together. To build a two-chip system with very high processing power, an ultra-fast bus connecting the two chips is essential.

Section 3 describes the KeyStone DSP architecture family, which meets all of the above requirements and challenges.

3. KeyStone DSP – TI's latest multi-core processor

The TI KeyStone architecture covers a wide range of multi-core devices for applications that require high performance and high bandwidth, such as video processing. Figure 2 gives a general description of the KeyStone DSP. This section describes how the KeyStone DSP's features address the video processing hardware requirements described in Section 2.

Figure 2 KeyStone DSP block diagram

| Feature | Details |
|---|---|
| Cores | Eight new C66x DSP cores at 1 GHz with floating-point capability; performance: 256 GMAC, 128 GFLOPS |
| Memory | 32 KB L1P and 32 KB L1D per core; 512 KB local L2 per core; 4 MB shared L2 memory |
| Accelerators | Packet accelerator |
| Interconnect | Switch fabric and EDMA3 |
| Peripherals | Ethernet switch with 2x SGMII (data/control); 4x SRIO at 5 Gbps; 2x PCIe; 2x TSIP; 16/32/64-bit DDR3; EMIF-16; SPI; I2C; UART; GPIO |
| System monitoring | JTAG; embedded trace buffer; trace port |
| Device specifications | Power: 7.5 W at 75 °C, 9 W at 105 °C; package: 24 x 24 mm (target); 40 nm process; 841 pins (full array); core voltage with SmartReflex technology: 1 V at 1 GHz, 0.9 V at 800 MHz |

| Multi-core video requirement | KeyStone features that meet the requirement |
|---|---|
| External I/O interface - compressed video over Ethernet | Two 1G SGMII ports provide a high-bit-rate Ethernet interface for packetized compressed video. As described in Section 2, typical HD video requires up to 10 Mbps, so the Ethernet interface can carry multiple compressed video channels. In addition, the KeyStone DSP has a packet accelerator hardware subsystem that supports multiple IP addresses and takes on part of the packet processing load from the cores. |
| External I/O interface - raw data interface | Two standard PCI Express lanes, each running at 5 Gbps. Assuming 60% bus utilization, each lane can carry 4 to 5 channels of 1080i60 in the YUV domain, 24 channels of D1, or more than 300 channels of QCIF at 30 fps. In addition, four SRIO lanes at 5 Gbps each provide four times that capacity at the same 60% utilization. |
| External I/O interface - voice processing | Two Telecom Serial Interface Ports (TSIPs), each supporting 2, 4, or 8 lanes at 32.768, 16.384, or 8.192 Mbps per lane respectively and up to 1024 DS0s, provide enough bandwidth for the voice processing associated with video applications. |
| Processing capacity | The first release of the KeyStone DSP has eight cores at 1 GHz, providing 8G cycles per second. The eight functional units in each core work in parallel, giving 64G operations per second (floating point, fixed point, and data movement). In addition, the new C66x core supports all instructions of the TI C64x+ and C67x cores plus additional SIMD instructions that perform two or four arithmetic operations at once, so the theoretical operation count for vector processing reaches 128G or even 256G per second. These SIMD instructions can significantly improve the efficiency of vector-oriented video algorithms such as motion estimation, transforms, and quantization. |
| Memory - on-chip memory | Each core has 32 KB of L1 data memory and 32 KB of L1 program memory, each configurable as RAM, cache, or a combination of both. Each core also has 512 KB of private L2 memory, up to 256 KB of which can be configured as a 4-way L2 cache. In addition, the KeyStone DSP has 4 MB of shared L2 memory. |
| Memory - external memory | Up to 8 GB of DDR3 in 1x16, 1x32, and 1x64 modes, at up to 1600 MT/s, providing up to 12.8 GB/s of raw bandwidth. |
| Memory - multi-core shared memory controller | 2 x 56-bit direct connections to the DDR External Memory Interface (EMIF); 2 x 256-bit direct connections to each DSP core; multiple prefetch streams for program and data. |
| Memory - DMA | Ten transfer controllers and 144 Enhanced Direct Memory Access (EDMA) channels move data to and from external memory efficiently. |
| Synchronization and global collaboration between cores | Hardware support for 64 independent semaphores with blocking and non-blocking access, supporting both direct and indirect requests. |
| Tight and loose coupling between cores; data and message transfer | The Multicore Navigator is a hardware queue manager that controls 8,192 queues and has six channelized DMA engines that can transfer messages. It supports passing data and messages between tightly or loosely coupled cores, and helps order data efficiently as it moves from multiple sources to multiple destinations. |
| Fast bus connecting two chips | The four-lane HyperLink bus runs at up to 12.5 Gbps per lane, for up to 50 Gbps in total. |

Table 1 KeyStone DSP and video processing requirements
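The raw-data-interface figures in Table 1 (4 to 5 channels of 1080i60, 24 of D1, more than 300 of QCIF at 30 fps) correspond to one 5 Gbps link at 60% utilization. A sketch that takes the 5 Gbps line rate at face value (line coding and protocol overhead are not modeled) and reuses the 12-bits-per-pixel raw rates from Section 2.1; the names are illustrative:

```python
# Raw 8-bit 4:2:0 rates in Mbps (12 bits per pixel), as in Section 2.1.
RAW_MBPS = {
    "1080i60": 1920 * 1080 * 12 * 30 / 1e6,
    "D1": 720 * 480 * 12 * 30 / 1e6,
    "QCIF 30 fps": 176 * 144 * 12 * 30 / 1e6,
}
LANE_GBPS = 5.0
UTILIZATION = 0.60

usable_mbps = LANE_GBPS * 1000 * UTILIZATION
capacity = {fmt: usable_mbps / rate for fmt, rate in RAW_MBPS.items()}
for fmt, n in capacity.items():
    print(f"{fmt:12s} {n:6.1f} channels per lane")
print(f"4 SRIO lanes: {4 * usable_mbps / 1000:.1f} Gbps usable")
```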


Appendix A - Decoder Performance Dependencies

The tools and algorithms used in the encoder, as well as the video content, affect decoder performance. The following factors affect decoder performance:

• Choice of CABAC or CAVLC entropy coding

• Number of skipped frames

• Complexity of intra prediction modes

• Prediction type: motion-compensated (inter) prediction or intra prediction. Motion compensation and intra prediction consume different numbers of decoding cycles, and which one is used is decided by the encoder.

• Different motion estimation tools (one motion vector per macroblock, 4 motion vectors per macroblock, or 8 motion vectors per macroblock) can change the complexity and number of cycles of the decoder.

• Motion compensation for the B-frame macroblock involves two reference macroblocks and consumes more cycles.

• The amount of motion in the media stream not only changes the number of skipped macroblocks, but also changes the processing requirements of the decoder.

• The distribution of bits among syntax elements such as motion vectors, module values, and flags depends on the content of the media stream and the encoder algorithm. Different distributions change the entropy decoder's cycle count accordingly.

Appendix B - Motion Estimation and Rate Control

Motion estimation is a large part of H.264 encoding, and the quality of an H.264 encoder depends on the quality of its motion estimation algorithm. The number of cycles motion estimation requires depends on the features of the algorithm. The main factors affecting motion estimation cycle consumption are:

• Frequency of I-, P-, and B-frames

• Number of reference frames in L0 (for P- and B-frames) and L1 (for B-frames)

• Number of search areas

• Size of the search area

• Search algorithm

A good motion estimation algorithm may consume 40-50% or more of total encoding cycles.
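To show why search-area size and the number of reference frames drive this cost, here is exhaustive (full-search) block matching with a sum-of-absolute-differences (SAD) cost. This is the simplest and most expensive search algorithm, not the method of any particular encoder; practical encoders use faster searches. Frames are plain lists of 8-bit samples, and the function names are illustrative. Each block costs up to (2R+1)^2 SAD evaluations of n x n pixels per reference frame, where R is the search range.

```python
import random


def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between an n x n block in cur and a displaced block in ref."""
    total = 0
    for y in range(n):
        for x in range(n):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total


def full_search(cur, ref, bx, by, n, search_range):
    """Exhaustive block matching over +/-search_range; returns (best_mv, best_sad, evaluations)."""
    h, w = len(ref), len(ref[0])
    best_mv, best_cost, evaluations = (0, 0), None, 0
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            if not (0 <= by + dy and by + dy + n <= h and 0 <= bx + dx and bx + dx + n <= w):
                continue  # skip candidates that fall outside the reference frame
            cost = sad(cur, ref, bx, by, dx, dy, n)
            evaluations += 1
            if best_cost is None or cost < best_cost:
                best_mv, best_cost = (dx, dy), cost
    return best_mv, best_cost, evaluations


random.seed(1)
SIZE = 32
ref = [[random.randrange(256) for _ in range(SIZE)] for _ in range(SIZE)]
# Current frame = reference displaced so that the best match sits at (dx, dy) = (-3, 2).
cur = [[ref[(y + 2) % SIZE][(x - 3) % SIZE] for x in range(SIZE)] for y in range(SIZE)]
mv, cost, evals = full_search(cur, ref, bx=8, by=8, n=8, search_range=4)
print(mv, cost, evals)
```

Doubling the search range to 8 would raise the candidate count from 81 to 289 per block, and each extra reference frame repeats the whole search.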

The rate control algorithm is a major factor in encoding quality. To maximize the perceived quality of the video, an intelligent rate control algorithm allocates the available bits among macroblocks and frames.

Some systems use multiple encoding passes to allocate the available bits among macroblocks more effectively. Although multi-pass encoding improves perceived quality, it requires much more processing.

Appendix C - External Memory Bandwidth

Because of the motion estimation algorithm, encoders typically require more memory bandwidth than decoders. The encoder requirements are calculated for two cases: low-bit-rate QCIF and high-bit-rate 1080p.

• Case 1 - QCIF H.264 BP encoder:

Two complete QCIF frames can reside in the cache or in an L2 ping-pong buffer, each requiring less than 40 KB. When encoding a frame with one reference frame, the system must transfer 80 KB of data per QCIF frame and outputs a small amount of data. The total internal bandwidth required for 200 QCIF channels at 15 fps is:

80 KB * 15 (fps) * 200 (channels) + 200 (channels) * 256/8 KB/s (QCIF output bit rate) = 240 MB/s + 6.4 MB/s ≈ 250 MB/s

• Case 2 - 1080p60 H.264 HP encoder:

Assuming a worst-case algorithm, each reference frame used for motion estimation may have to be moved from external to internal memory up to three times. Assume also an advanced algorithm that uses up to four reference frames. The motion estimation traffic for a single 1080p60 channel is then:

3 (copies) * 1920 * 1080 * 1 (only 1 byte per pixel is used in motion estimation) * 60 (fps) * 4 (reference frames) = 1492.992 MBps

Moving the current frame being processed and the motion-compensation data requires:

2 (current frame and motion compensation) * 1920 * 1080 * 1.5 (bytes/pixel) * 60 (fps) = 373.248 MBps

Summing these two results (the output bitstream is small by comparison and is not included), one channel requires 1866.24 MBps, so two H.264 HP 1080p60 encoders require 3732.48 MBps, roughly 30% of the external memory's raw bandwidth.
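The Appendix C arithmetic can be reproduced directly. A sketch using the appendix's own parameters as defaults, decimal units (1 MB = 1000 KB = 10^6 bytes), and 12.8 GB/s as the raw DDR3 bandwidth from Table 1; the function names are illustrative:

```python
def qcif_mbytes_per_s(channels=200, fps=15, kb_per_frame=80, out_kbps=256):
    """Case 1: per-frame data traffic plus output bitstream, in MB/s."""
    frame_traffic_kb = kb_per_frame * fps * channels
    output_kb = channels * out_kbps / 8
    return (frame_traffic_kb + output_kb) / 1000


def hd_encoder_mbytes_per_s(width=1920, height=1080, fps=60, copies=3, ref_frames=4):
    """Case 2: motion-estimation reads (1 byte/pixel) plus current frame and MC (1.5 bytes/pixel)."""
    motion_estimation = copies * width * height * 1 * fps * ref_frames
    current_and_mc = 2 * width * height * 1.5 * fps
    return (motion_estimation + current_and_mc) / 1e6


case1 = qcif_mbytes_per_s()
case2_one = hd_encoder_mbytes_per_s()
case2_two = 2 * case2_one
DDR3_RAW_MBYTES_PER_S = 12_800  # 64-bit DDR3 at 1600 MT/s
print(f"Case 1: {case1:.1f} MB/s")
print(f"Case 2: {case2_one:.2f} MB/s per channel, {case2_two:.2f} MB/s for two "
      f"({case2_two / DDR3_RAW_MBYTES_PER_S:.0%} of raw DDR3 bandwidth)")
```

The exact Case 1 total is 246.4 MB/s (rounded to 250 MB/s above), and two 1080p60 encoders use about 29% of the raw DDR3 bandwidth.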
