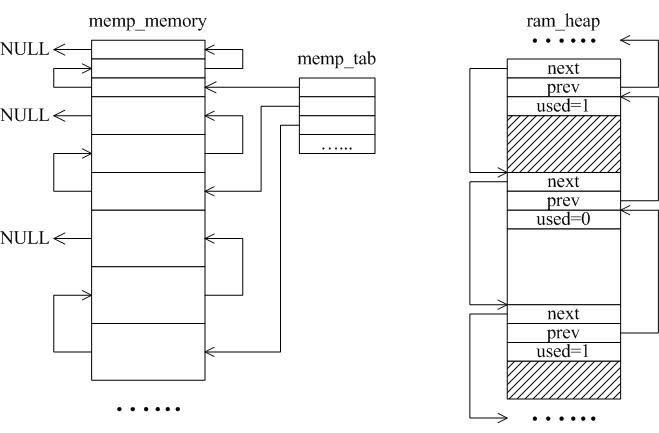

LWIP, a lightweight TCP/IP stack, offers two primary memory management techniques: memory pools (memp) and a memory heap (mem). As illustrated in Figure 1, these mechanisms serve different purposes and suit different use cases. Memory pools are pre-allocated sets of fixed-size blocks kept on linked free lists, so allocation and deallocation are fast and cause no fragmentation. However, the block size and the number of blocks are fixed at build time, so both must be estimated carefully in advance.
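The pool idea can be sketched in a few lines of C. This is a simplified, self-contained model written for illustration, not LWIP's memp.c; the names `pool_init`, `pool_alloc`, `pool_free`, `BLOCK_SIZE`, and `NUM_BLOCKS` are hypothetical.

```c
#include <stddef.h>

/* Hypothetical sizes for illustration; LWIP derives its pool sizes from lwipopts.h. */
#define BLOCK_SIZE 64
#define NUM_BLOCKS 4

/* A free block stores the pointer to the next free block in its own storage. */
typedef union block {
    union block *next;
    unsigned char data[BLOCK_SIZE];
} block_t;

static block_t pool_storage[NUM_BLOCKS]; /* reserved at compile time */
static block_t *free_list;

/* Thread every block onto the free list. */
void pool_init(void) {
    free_list = NULL;
    for (size_t i = 0; i < NUM_BLOCKS; i++) {
        pool_storage[i].next = free_list;
        free_list = &pool_storage[i];
    }
}

/* O(1): pop the head of the free list, or return NULL when the pool is empty. */
void *pool_alloc(void) {
    block_t *b = free_list;
    if (b != NULL) {
        free_list = b->next;
    }
    return b;
}

/* O(1): push the block back onto the free list; no searching or merging. */
void pool_free(void *p) {
    block_t *b = p;
    b->next = free_list;
    free_list = b;
}
```

Because every block has the same size, any freed block can satisfy the next request, which is why a pool cannot fragment; the trade-off is that `NUM_BLOCKS` and `BLOCK_SIZE` must be chosen in advance.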

In contrast, the memory heap manages a single large region of memory (sized by MEM_SIZE) from which variable-sized chunks are carved. This is more flexible, but the heap can fragment, and each operation costs more work: allocation must search for a free region that is large enough, and deallocation must merge the freed region with adjacent free regions. The choice between the two depends on the application's requirements and system constraints.
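For contrast, the heap's search and merge steps can be modeled with a first-fit allocator over one static array. This is a simplified sketch under stated assumptions: `HEAP_SIZE`, `heap_init`, `heap_alloc`, and `heap_free` are hypothetical names, and merging is done with a full pass over the heap for brevity. LWIP's own mem.c instead keeps previous/next links in each block header so it can merge a freed block with its immediate neighbours directly.

```c
#include <stddef.h>

#define HEAP_SIZE 1024  /* hypothetical; plays the role of MEM_SIZE */

/* Header in front of every block, free or used. */
struct hdr {
    size_t size;  /* payload bytes following this header */
    int used;     /* nonzero while the block is allocated */
};

/* Round sizes up to whole headers so every header stays aligned. */
#define ALIGN_UP(n) \
    (((n) + sizeof(struct hdr) - 1) / sizeof(struct hdr) * sizeof(struct hdr))

/* The union gives the byte array the alignment of struct hdr. */
static union {
    struct hdr first;
    unsigned char bytes[HEAP_SIZE];
} area;

static struct hdr *block_at(size_t off) {
    return (struct hdr *)(area.bytes + off);
}

/* Start with one free block spanning the whole heap. */
void heap_init(void) {
    struct hdr *h = block_at(0);
    h->size = HEAP_SIZE - sizeof(struct hdr);
    h->used = 0;
}

/* First fit: search from the start for a free block that is large enough. */
void *heap_alloc(size_t n) {
    if (n == 0) {
        return NULL;
    }
    n = ALIGN_UP(n);
    for (size_t off = 0; off < HEAP_SIZE;
         off += sizeof(struct hdr) + block_at(off)->size) {
        struct hdr *h = block_at(off);
        if (h->used || h->size < n) {
            continue;
        }
        /* Split off the remainder if it can hold a header plus some payload. */
        if (h->size >= n + 2 * sizeof(struct hdr)) {
            struct hdr *rest = block_at(off + sizeof(struct hdr) + n);
            rest->size = h->size - n - sizeof(struct hdr);
            rest->used = 0;
            h->size = n;
        }
        h->used = 1;
        return h + 1;  /* payload starts right after the header */
    }
    return NULL;  /* no free block is large enough */
}

/* Mark the block free, then merge every run of adjacent free blocks. */
void heap_free(void *p) {
    if (p == NULL) {
        return;
    }
    ((struct hdr *)p - 1)->used = 0;
    size_t off = 0;
    while (off < HEAP_SIZE) {
        struct hdr *h = block_at(off);
        size_t next = off + sizeof(struct hdr) + h->size;
        if (!h->used && next < HEAP_SIZE && !block_at(next)->used) {
            h->size += sizeof(struct hdr) + block_at(next)->size;
            continue;  /* the grown block may now touch another free block */
        }
        off = next;
    }
}
```

Freeing two adjacent blocks yields one larger free block, so a later request bigger than either original block can still be satisfied; without the merge step the heap would break up into pieces too small to use.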

Figure 1: Memory Pool vs. Memory Heap

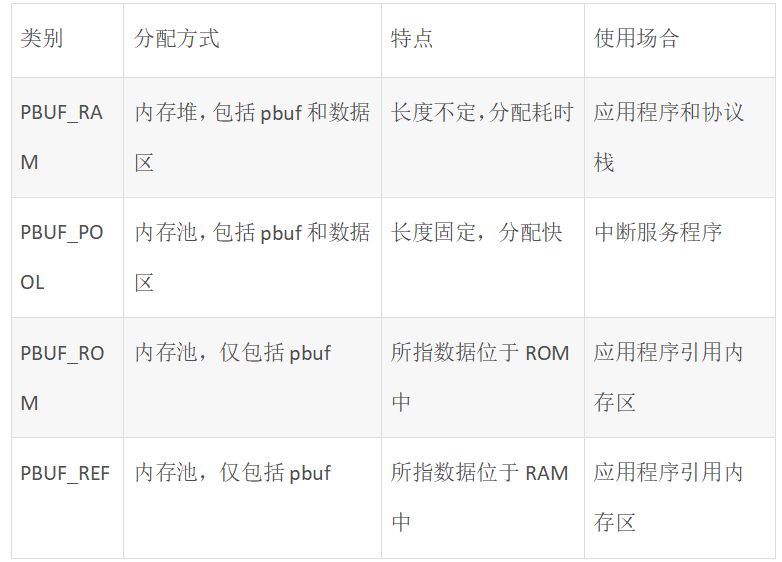

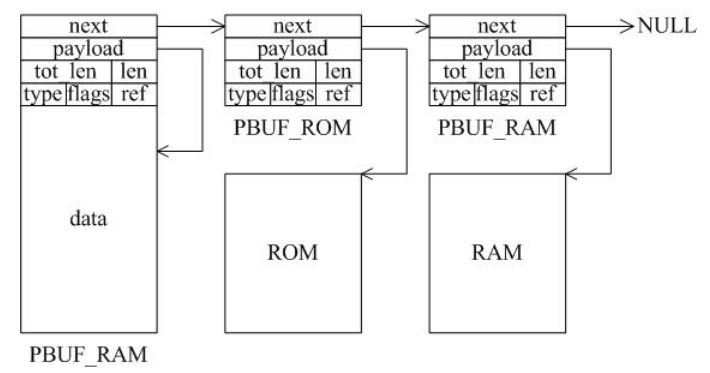

Packet management in LWIP is handled through pbuf structures, which come in four types (PBUF_RAM, PBUF_ROM, PBUF_REF, and PBUF_POOL) with distinct characteristics. These structures let LWIP handle packets efficiently, especially when a packet's data is spread across several segments. Table 1 outlines the types and their respective uses.

Table 1: PBUF Types and Characteristics

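Each pbuf type allocates memory differently, as shown in Figure 2. PBUF_RAM and PBUF_POOL pbufs carry their payload with them, whereas PBUF_ROM and PBUF_REF pbufs only point to payload stored elsewhere, so choosing the right type avoids unnecessary copying and memory use, which matters most in resource-constrained environments.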

Figure 2: Four PBUF Management Structures

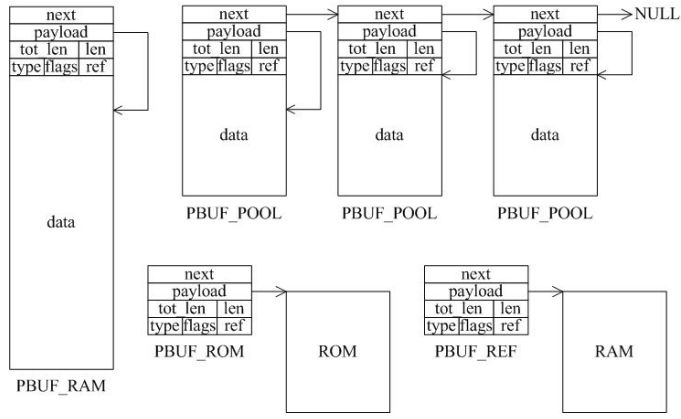

Data packets may consist of multiple pbufs connected via a linked list, as seen in Figure 3. This lets a large packet span several buffers, or a header pbuf be combined with a payload pbuf, without copying the data into one contiguous block.
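Code that consumes a received packet must follow the `next` pointers rather than assume one contiguous payload. The sketch below uses a simplified stand-in struct that mirrors only the `next`, `payload`, `len`, and `tot_len` fields of LWIP's `struct pbuf`; `struct mini_pbuf` and `chain_copy` are hypothetical names, and `chain_copy` is written in the spirit of LWIP's `pbuf_copy_partial()`, not as that function.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simplified stand-in for LWIP's struct pbuf (illustration only). */
struct mini_pbuf {
    struct mini_pbuf *next; /* next pbuf in the chain, NULL at the end */
    void *payload;          /* data held by this pbuf */
    uint16_t len;           /* bytes in this pbuf */
    uint16_t tot_len;       /* bytes in this pbuf plus all following pbufs */
};

/* Copy up to buf_len bytes of the whole chain into buf; returns bytes copied. */
size_t chain_copy(const struct mini_pbuf *p, void *buf, size_t buf_len) {
    size_t copied = 0;
    for (const struct mini_pbuf *q = p; q != NULL && copied < buf_len; q = q->next) {
        size_t n = q->len;
        if (n > buf_len - copied) {
            n = buf_len - copied; /* truncate at the end of the destination */
        }
        memcpy((unsigned char *)buf + copied, q->payload, n);
        copied += n;
    }
    return copied;
}
```

For every pbuf except the last, `tot_len == len + next->tot_len`; for the last one, `tot_len == len`. The first pbuf's `tot_len` is therefore the length of the whole packet.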

Figure 3: PBUF Linked List

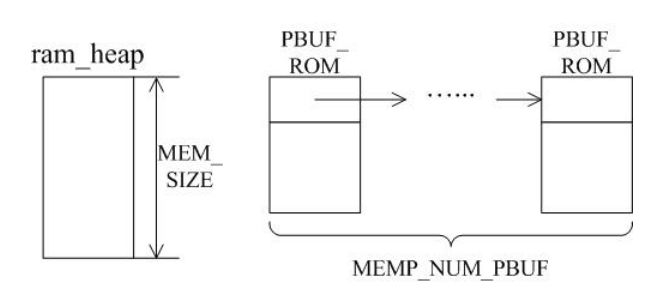

Configuring memory size is essential for optimal LWIP performance. It's recommended to dedicate a separate memory heap to LWIP so that its mem_malloc() and mem_free() functions operate independently of the rest of the system's memory. The macro MEM_SIZE in lwipopts.h defines the heap size; heavily loaded systems need a larger value.
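A minimal lwipopts.h fragment for a dedicated LWIP heap might look like the following. The values are illustrative assumptions, not recommendations; size MEM_SIZE from the application's peak load.

```c
/* lwipopts.h (fragment): illustrative values only, tune for your system */

/* Size in bytes of LWIP's own heap, used by mem_malloc()/mem_free()
 * and by PBUF_RAM pbufs. Increase it for heavily loaded systems. */
#define MEM_SIZE        (16 * 1024)

/* Alignment of heap blocks; typically 4 on 32-bit microcontrollers. */
#define MEM_ALIGNMENT   4
```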

Figure 4: Heap and PBUF_ROM Memory Area

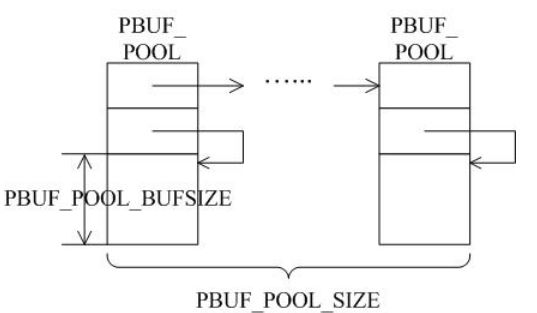

LWIP uses PBUF_ROM pbufs to reference read-only data, such as static content stored in ROM. Only the pbuf header is allocated, from a memory pool whose size is set by the macro MEMP_NUM_PBUF (the same pool also supplies PBUF_REF headers). For allocation in interrupt service routines, where speed is critical, LWIP uses PBUF_POOL buffers. Two macros, PBUF_POOL_SIZE and PBUF_POOL_BUFSIZE, define the number of buffers in the pool and the size of each buffer, respectively.
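How many pool buffers a received frame occupies follows from PBUF_POOL_BUFSIZE. The function below is a simplified model of how LWIP chains PBUF_POOL buffers: alignment padding is ignored, and `pool_bufs_needed` is a hypothetical name. For example, a 1514-byte frame needs 3 buffers of 512 bytes but only 1 buffer of 1536 bytes.

```c
#include <stddef.h>

/* Number of pool buffers a chain of `len` payload bytes would occupy, assuming
 * every buffer holds `bufsize` bytes (cf. PBUF_POOL_BUFSIZE) and the first one
 * reserves `offset` bytes for protocol headers. Alignment padding is ignored in
 * this simplified model. Requires offset < bufsize. */
size_t pool_bufs_needed(size_t len, size_t bufsize, size_t offset) {
    size_t count = 1;                 /* even an empty packet takes one buffer */
    size_t first = bufsize - offset;  /* payload room in the first buffer */
    if (len <= first) {
        return count;
    }
    len -= first;
    count += (len + bufsize - 1) / bufsize;  /* ceiling division for the rest */
    return count;
}
```

The pool's total payload storage is roughly PBUF_POOL_SIZE × PBUF_POOL_BUFSIZE; with the illustrative values 16 and 1536 that is 16 × 1536 = 24576 bytes, plus one pbuf header per buffer. Larger buffers keep full-size frames in a single pbuf, while smaller ones waste less memory on small packets.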

Figure 5: PBUF_POOL Memory Area

LWIP also provides several compile-time macros to control its behavior. For example, setting MEM_LIBC_MALLOC=1 makes LWIP use the standard C library functions malloc and free, while MEMP_MEM_MALLOC=1 disables the internal memory pool implementation and serves pool requests from the heap instead. Similarly, MEM_USE_POOLS=1 builds the heap from custom memory pools of different sizes, which the user must define in lwippools.h.
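The switches above go in lwipopts.h as well. The fragment below is an illustrative combination, not a recommendation: with the first two enabled, all LWIP allocation ends up in the C library heap. MEM_USE_POOLS is left at 0 because it cannot be combined with either of the other two; enabling it also requires MEMP_USE_CUSTOM_POOLS.

```c
/* lwipopts.h (fragment): alternative allocator switches, illustrative values */

/* 1: mem_malloc()/mem_free() map to the C library's malloc()/free(). */
#define MEM_LIBC_MALLOC   1

/* 1: memp pools are served from the heap instead of static arrays. */
#define MEMP_MEM_MALLOC   1

/* 1: the heap is built from pools of various sizes, declared in lwippools.h
 * (also requires MEMP_USE_CUSTOM_POOLS). Incompatible with the two above. */
#define MEM_USE_POOLS     0
```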

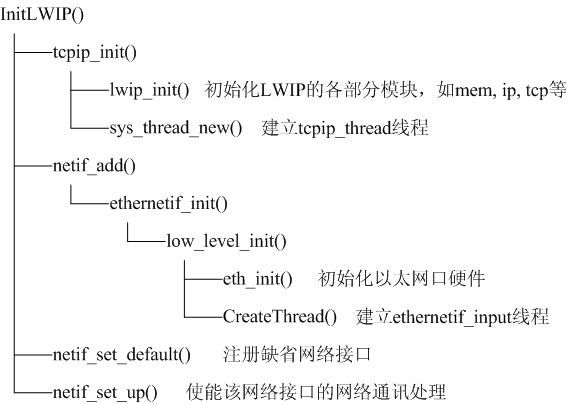

The LWIP startup process involves initializing the Ethernet interface and creating two threads: tcpip_thread handles most of the protocol stack's work, while ethernetif_thread picks up incoming packets. Separating reception from protocol processing lets the driver collect frames promptly while all protocol processing stays in a single thread.

Figure 6: LWIP Startup Function

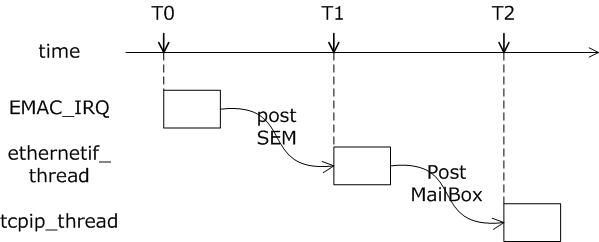

Once running, LWIP receives packets as follows: the Ethernet receive interrupt signals a semaphore that wakes the ethernetif thread, which passes the packet to tcpip_thread as a message through tcpip_thread's mailbox. Because only tcpip_thread operates on the protocol stack's internal state, the stack needs no locking of its own, and the driver thread can quickly return to waiting for the next frame.
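The hand-off to tcpip_thread is, at its core, a bounded message queue whose messages carry a callback. The single-threaded sketch below models that pattern with a ring buffer. `mbox_post`, `mbox_fetch`, `tcpip_dispatch`, `struct msg`, and `handle_input` are hypothetical names; a real port uses LWIP's `sys_mbox_post()`/`sys_arch_mbox_fetch()`, which add the blocking and locking this model omits.

```c
#include <stdbool.h>
#include <stddef.h>

#define MBOX_SIZE 8  /* hypothetical capacity */

/* A message carries the function tcpip_thread should run and its argument. */
struct msg {
    void (*fn)(void *arg);
    void *arg;
};

/* Bounded ring buffer standing in for tcpip_thread's mailbox. */
struct mbox {
    struct msg slots[MBOX_SIZE];
    size_t head, count;
};

/* Producer side (receive thread): fails instead of blocking when full. */
bool mbox_post(struct mbox *mb, struct msg m) {
    if (mb->count == MBOX_SIZE) {
        return false;  /* caller would drop the packet or retry */
    }
    mb->slots[(mb->head + mb->count) % MBOX_SIZE] = m;
    mb->count++;
    return true;
}

/* Consumer side (tcpip_thread): returns false when no message is waiting. */
bool mbox_fetch(struct mbox *mb, struct msg *out) {
    if (mb->count == 0) {
        return false;
    }
    *out = mb->slots[mb->head];
    mb->head = (mb->head + 1) % MBOX_SIZE;
    mb->count--;
    return true;
}

/* One pass of the tcpip_thread loop: run every queued message in order. */
size_t tcpip_dispatch(struct mbox *mb) {
    struct msg m;
    size_t handled = 0;
    while (mbox_fetch(mb, &m)) {
        m.fn(m.arg);
        handled++;
    }
    return handled;
}

/* Hypothetical handler standing in for the stack's input path. */
static size_t bytes_seen;
void handle_input(void *arg) {
    bytes_seen += *(const size_t *)arg;  /* arg points to a frame length */
}
```

Messages are processed strictly in arrival order by one consumer, which is what lets the stack avoid internal locks; a full mailbox is reported to the producer rather than silently overwritten.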

Figure 7: Receiving a Packet

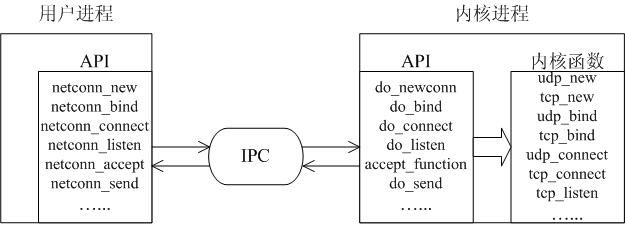

LWIP's API splits each call into two parts: one runs in the user application's thread and handles data preparation, while the other runs in the protocol stack's thread and performs the actual communication. Keeping application work out of the stack thread improves efficiency and real-time performance. The threads communicate through IPC mechanisms such as mailboxes, semaphores, and shared memory.

Figure 9: Protocol Stack API Implementation

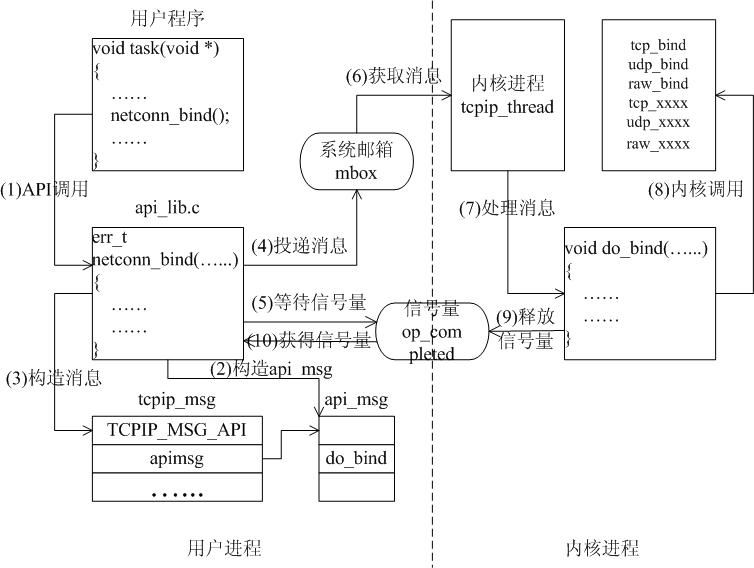

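For example, when an application calls netconn_bind(), the call packages its arguments into an API message, posts the message to the protocol stack thread (tcpip_thread), and then waits on a semaphore. tcpip_thread executes the corresponding bind function and signals the semaphore, which releases the caller. The application thread therefore blocks for the duration of the call, while all protocol processing stays in tcpip_thread, so the stack's internal state is never touched from two threads at once.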
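The post-and-wait pattern behind netconn_bind() can be modeled with POSIX threads. In this sketch a worker thread stands in for tcpip_thread, a one-slot mailbox stands in for its message queue, and a mutex/condition-variable pair stands in for the per-call semaphore. All names (`struct api_req`, `api_call`, `worker_start`, `worker_stop`, `do_bind`) are hypothetical; this is not LWIP's api_msg code.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* One request: the function to run in the stack thread, its argument, its result. */
struct api_req {
    int (*fn)(void *arg);
    void *arg;
    int result;
    bool done;
};

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t cond = PTHREAD_COND_INITIALIZER;
static struct api_req *pending;  /* one-slot mailbox, NULL when empty */
static bool stop;
static pthread_t worker_tid;

/* Stands in for tcpip_thread: takes requests from the mailbox and runs them. */
static void *worker(void *unused) {
    (void)unused;
    pthread_mutex_lock(&lock);
    for (;;) {
        while (pending == NULL && !stop) {
            pthread_cond_wait(&cond, &lock);
        }
        if (pending == NULL) {
            break;  /* stop requested and the mailbox is empty */
        }
        struct api_req *req = pending;
        pending = NULL;
        pthread_cond_broadcast(&cond);    /* mailbox slot is free again */
        pthread_mutex_unlock(&lock);
        req->result = req->fn(req->arg);  /* stack-side work runs only here */
        pthread_mutex_lock(&lock);
        req->done = true;
        pthread_cond_broadcast(&cond);    /* release the waiting caller */
    }
    pthread_mutex_unlock(&lock);
    return NULL;
}

/* Application side: post the request, then block until the worker finishes it. */
int api_call(int (*fn)(void *), void *arg) {
    struct api_req req = { fn, arg, 0, false };
    pthread_mutex_lock(&lock);
    while (pending != NULL) {
        pthread_cond_wait(&cond, &lock);
    }
    pending = &req;
    pthread_cond_broadcast(&cond);
    while (!req.done) {
        pthread_cond_wait(&cond, &lock);
    }
    pthread_mutex_unlock(&lock);
    return req.result;
}

int worker_start(void) {
    stop = false;
    return pthread_create(&worker_tid, NULL, worker, NULL);
}

void worker_stop(void) {
    pthread_mutex_lock(&lock);
    stop = true;
    pthread_cond_broadcast(&cond);
    pthread_mutex_unlock(&lock);
    pthread_join(worker_tid, NULL);
}

/* Hypothetical stack-side handler: records which thread ran it, checks the port. */
static pthread_t ran_on;
int do_bind(void *arg) {
    ran_on = pthread_self();
    int port = *(const int *)arg;
    return (port > 0 && port <= 65535) ? 0 : -1;
}
```

Because every request runs inside the single worker thread, stack-side handlers such as `do_bind` never run concurrently with one another; the price is that the caller waits for each call to finish.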

Figure 10: API Function Implementation

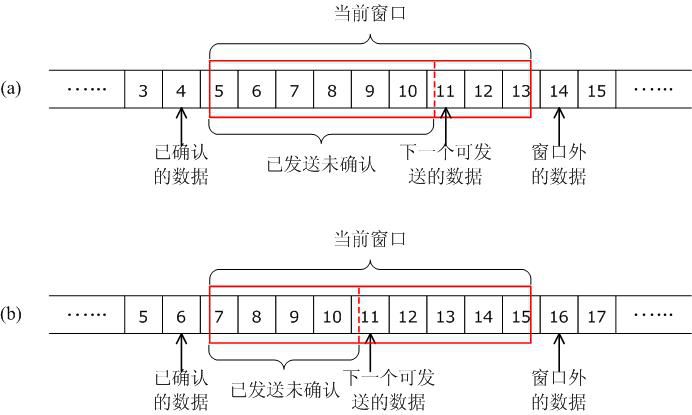

TCP/IP core concepts such as sliding windows, three-way handshakes, and state transitions are fundamental to understanding how LWIP manages network communication. The sliding window mechanism regulates data flow: the sender may have at most one window's worth of unacknowledged data outstanding, and the window advances as acknowledgments arrive, so a fast sender cannot overrun a slow receiver.
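The sender-side rule can be worked through with RFC 793 variable names: `snd_una` is the oldest unacknowledged sequence number and `snd_nxt` the next one to send. These roughly correspond to fields of LWIP's `tcp_pcb` (such as `lastack`, `snd_nxt`, `snd_wnd`, and `cwnd`), but `usable_window` is a hypothetical helper, not an LWIP function. For example, with `snd_una = 1000`, `snd_nxt = 3000`, and a 4096-byte advertised window, 2000 bytes are in flight and 2096 more may be sent.

```c
#include <stdint.h>

/* Bytes the sender may still transmit under the sliding-window rule:
 * window    = min(peer's advertised window, congestion window)
 * in flight = snd_nxt - snd_una (unsigned arithmetic handles wraparound). */
uint32_t usable_window(uint32_t snd_una, uint32_t snd_nxt,
                       uint32_t snd_wnd, uint32_t cwnd) {
    uint32_t wnd = snd_wnd < cwnd ? snd_wnd : cwnd;
    uint32_t in_flight = snd_nxt - snd_una;
    return in_flight >= wnd ? 0 : wnd - in_flight;
}
```

When the receiver advertises a zero window, the usable window is zero until a window update arrives, which is how the receiver throttles the sender.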

Figure 11: Sliding Window

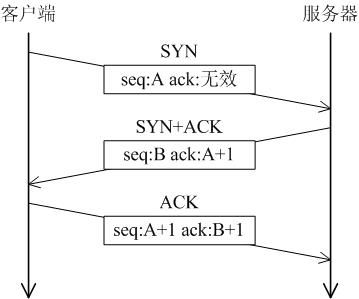

The three-way handshake (SYN, SYN-ACK, ACK) establishes a connection, with each side choosing an initial sequence number and acknowledging the other's before data transfer begins. Connection termination likewise follows a well-defined sequence (a FIN and its ACK in each direction) so that the session shuts down gracefully.
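The client-side path through the handshake and the active close can be written as a small state machine. This sketch covers only a subset of the RFC 793 states: simultaneous open and close, CLOSING, and the passive side's LISTEN, SYN_RCVD, CLOSE_WAIT, and LAST_ACK are omitted. The enum and function names are hypothetical, not LWIP's `enum tcp_state`.

```c
/* Subset of RFC 793 states on the active open / active close path. */
enum tcp_st { ST_CLOSED, ST_SYN_SENT, ST_ESTABLISHED,
              ST_FIN_WAIT_1, ST_FIN_WAIT_2, ST_TIME_WAIT };

/* Events seen by the side that opens and later closes the connection. */
enum tcp_ev { EV_CONNECT, EV_RECV_SYNACK, EV_CLOSE,
              EV_RECV_ACK, EV_RECV_FIN, EV_TIMEOUT_2MSL };

/* Returns the next state; unexpected events leave the state unchanged. */
enum tcp_st tcp_next(enum tcp_st s, enum tcp_ev e) {
    switch (s) {
    case ST_CLOSED:      if (e == EV_CONNECT)      return ST_SYN_SENT;    break; /* send SYN */
    case ST_SYN_SENT:    if (e == EV_RECV_SYNACK)  return ST_ESTABLISHED; break; /* send ACK */
    case ST_ESTABLISHED: if (e == EV_CLOSE)        return ST_FIN_WAIT_1;  break; /* send FIN */
    case ST_FIN_WAIT_1:  if (e == EV_RECV_ACK)     return ST_FIN_WAIT_2;  break;
    case ST_FIN_WAIT_2:  if (e == EV_RECV_FIN)     return ST_TIME_WAIT;   break; /* send ACK */
    case ST_TIME_WAIT:   if (e == EV_TIMEOUT_2MSL) return ST_CLOSED;      break;
    }
    return s;
}
```

The TIME_WAIT state holds the connection for twice the maximum segment lifetime so that a lost final ACK can be retransmitted and stray segments from this connection expire before the port pair is reused.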

Figure 14: Connection Establishment Process

Proper usage of LWIP is key to stability and performance. Common issues include incorrectly sized memory configurations, improper use of API functions, and failure to release resources such as pbufs and connections. Ensuring that the system has enough memory and that all allocated resources are properly released prevents crashes and memory leaks.
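Leaks often come from unbalanced pbuf reference counts: in LWIP, each `pbuf_alloc()` or `pbuf_ref()` must eventually be matched by one `pbuf_free()`, which decrements the count, releases a pbuf only when the count reaches zero, and then continues along the chain. The sketch below models that counting rule with a hypothetical `struct rc_pbuf` and helpers `rc_ref`/`rc_free`; it marks pbufs as freed instead of returning memory so the behavior is easy to check.

```c
#include <stddef.h>
#include <stdint.h>

/* Simplified stand-in for LWIP's struct pbuf: only the fields this rule needs. */
struct rc_pbuf {
    struct rc_pbuf *next;
    uint16_t ref;  /* reference count; 1 right after allocation */
    int freed;     /* set instead of returning memory, for testability */
};

/* Take an extra reference, e.g. before queueing the packet elsewhere. */
void rc_ref(struct rc_pbuf *p) { p->ref++; }

/* Drop one reference. Each pbuf whose count reaches zero is released and the
 * walk moves to the next one; it stops at the first pbuf still referenced.
 * Returns the number of pbufs released. */
size_t rc_free(struct rc_pbuf *p) {
    size_t count = 0;
    while (p != NULL) {
        if (--p->ref > 0) {
            break;  /* another owner still holds this pbuf and its tail */
        }
        struct rc_pbuf *next = p->next;
        p->freed = 1;  /* real code would return p to its pool or heap */
        count++;
        p = next;
    }
    return count;
}
```

If one owner forgets its `pbuf_free()`, the count never reaches zero and the whole chain stays allocated; freeing twice is equally harmful, because the second call can release memory that another owner is still using.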

Frequently asked questions often revolve around driver compatibility, memory leaks, and connectivity issues. For instance, if a PC cannot establish a TCP connection with LWIP, the cause may be that the EMAC does not pad short frames: Ethernet frames must be at least 64 bytes including the FCS, so an unpadded handshake frame is too short and the PC drops it. Enabling automatic short-frame padding in the EMAC resolves the issue.
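Where the EMAC cannot pad automatically, a driver can pad in software before transmission. A bare TCP ACK is 54 bytes (14-byte Ethernet header + 20-byte IPv4 header + 20-byte TCP header), 6 bytes short of the 60-byte minimum that remains once the MAC appends the 4-byte FCS. The sketch below assumes a contiguous transmit buffer; `eth_pad_frame` and `ETH_MIN_FRAME_NO_FCS` are hypothetical names.

```c
#include <stddef.h>
#include <string.h>

#define ETH_MIN_FRAME_NO_FCS 60  /* 64-byte minimum minus the 4-byte FCS */

/* Zero-pad a frame in place to the Ethernet minimum. `cap` is the buffer's
 * capacity; returns the length to hand to the MAC, or 0 if the buffer is
 * too small to pad. */
size_t eth_pad_frame(unsigned char *frame, size_t len, size_t cap) {
    if (len >= ETH_MIN_FRAME_NO_FCS) {
        return len;  /* already long enough */
    }
    if (cap < ETH_MIN_FRAME_NO_FCS) {
        return 0;    /* padding would overflow the buffer */
    }
    memset(frame + len, 0, ETH_MIN_FRAME_NO_FCS - len);
    return ETH_MIN_FRAME_NO_FCS;
}
```

The padding bytes follow the IP datagram, and the receiver ignores them because the IPv4 header's total-length field says where the datagram ends.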


HuiZhou Antenk Electronics Co., LTD , https://www.atkconn.com